Surface Chemical Analysis: Core Concepts, Techniques, and Applications in Drug Development

Abstract

This article provides a comprehensive overview of surface chemical analysis, a critical field for understanding material interfaces at the atomic and molecular level. Tailored for researchers, scientists, and drug development professionals, it covers foundational terminology from international standards like ISO 18115, explores major spectroscopic and mass spectrometry techniques (XPS, AES, SIMS), and addresses their specific applications in characterizing nanoparticles, drug delivery systems, and biomaterials. The content also delves into common troubleshooting challenges, data optimization strategies, and the importance of methodological validation and comparative analysis to ensure data reliability in biomedical research and quality control.

What is Surface Chemical Analysis? Core Concepts and Terminology

In both materials science and biology, the surface represents the critical interface where a material interacts with its environment, dictating key properties and functions. Technically, for solid matter, the surface is defined as the outermost several atomic layers, an extremely shallow region whose chemical structure governs characteristics such as chemical activity, adhesion, wettability, electrical properties, corrosion resistance, and biocompatibility [1]. In biological systems, for instance, the cell surface is fundamental to processes like adhesion and communication. The analysis of this delicate interface requires specialized techniques because the surface's unique chemistry can be lost or altered by environmental degradation, contamination, or even the sample preparation process itself [2] [1]. This guide provides an in-depth technical framework for understanding and analyzing this critical interface, placing core concepts and definitions within the broader context of surface chemical analysis research.

Core Surface Analysis Techniques

Surface analysis techniques work by exciting the surface with photons, electrons, or ions in an ultra-high vacuum (UHV) environment (a pressure of one billionth of atmospheric pressure or lower) to reduce measurement interference [1]. The emitted particles, such as electrons or ions, are then analyzed to reveal the surface's elemental composition and chemical bonding states.
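Kinetic gas theory gives a quick estimate of why the vacuum matters. Molecules strike a surface at the Hertz-Knudsen flux Φ = P/√(2πmk_BT), so a freshly cleaned surface gradually re-covers with adsorbed gas. The sketch below is a back-of-envelope estimate, not a property of any particular instrument. It assumes N₂ at 300 K, that every impinging molecule sticks, and roughly 10¹⁹ adsorption sites per m² (about 10¹⁵ per cm²).

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # unified atomic mass unit, kg
ATM = 101325.0            # one standard atmosphere, Pa

def impingement_flux(pressure_pa, molecular_mass_u=28.014, temperature_k=300.0):
    """Hertz-Knudsen flux of gas molecules striking a surface, in m^-2 s^-1 (default: N2 at 300 K)."""
    m = molecular_mass_u * AMU
    return pressure_pa / math.sqrt(2 * math.pi * m * K_B * temperature_k)

def monolayer_time(pressure_pa, sites_per_m2=1e19, sticking=1.0, **gas):
    """Seconds to cover one monolayer; sticking=1 assumes every impact adsorbs (a worst case)."""
    return sites_per_m2 / (sticking * impingement_flux(pressure_pa, **gas))

for label, p in [("1 atm", ATM), ("1e-9 atm", 1e-9 * ATM), ("1e-7 Pa", 1e-7)]:
    print(f"{label:>9}: ~{monolayer_time(p):.3g} s per monolayer")
```

Under these assumptions, a clean surface held near 10⁻⁹ atm is covered within a few seconds. At 10⁻⁷ Pa it stays clean for roughly an hour. This is one reason practical UHV systems usually operate well below the upper bound quoted above. Sticking coefficients below 1 lengthen these times proportionally.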

Major Analytical Techniques

The main surface analysis techniques include X-ray Photoelectron Spectroscopy (XPS), Time-of-Flight Secondary Ion Mass Spectrometry (TOF-SIMS), and Auger Electron Spectroscopy (AES), each with distinct principles and applications [1].

X-ray Photoelectron Spectroscopy (XPS): This technique uses X-rays to irradiate the sample, generating photoelectrons via the photoelectric effect. The kinetic energy of these emitted photoelectrons is analyzed to determine the surface composition and chemical-bonding states. XPS is highly versatile and can be used for the surface analysis of both organic and inorganic materials [1]. Its capabilities can be extended through several related approaches:

- Small-Area XPS (SAXPS): Focuses on analyzing small features on a solid surface, such as particles or blemishes, by maximizing the signal from a specific area while minimizing contribution from the surroundings [3].

- XPS Depth Profiling: Uses an ion beam to controllably remove material, allowing for the examination of composition changes from the surface to the bulk. This is invaluable for studying corrosion, surface oxidation, and interface chemistry [3].

- Angle-Resolved XPS (ARXPS): Collects photoelectrons at varying emission angles, enabling non-destructive depth profiling of ultra-thin films on the nanometer scale [3].
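The uniform-overlayer (Beer-Lambert) model is a common way to turn ARXPS intensities into a film thickness. It uses d = λ cos θ · ln(1 + R/R∞), where:

- R is the measured overlayer-to-substrate intensity ratio.
- R∞ is the same ratio for the pure bulk materials.
- θ is the emission angle from the surface normal.

The sketch below assumes a flat, continuous film, one attenuation length λ for both signals, and negligible elastic scattering. The 2.0 nm film, λ = 2.5 nm and R∞ = 0.8 are hypothetical values. A forward model is included so the inversion can be checked.

```python
import math

def overlayer_thickness(ratio, ratio_bulk, imfp_nm, theta_deg):
    """Uniform overlayer thickness (nm) from an ARXPS intensity ratio.

    ratio      -- measured I_overlayer / I_substrate at emission angle theta_deg
    ratio_bulk -- the same ratio for infinitely thick pure materials
    imfp_nm    -- attenuation length, assumed equal for both signals
    theta_deg  -- emission angle measured from the surface normal
    """
    cos_t = math.cos(math.radians(theta_deg))
    return imfp_nm * cos_t * math.log(1.0 + ratio / ratio_bulk)

def simulated_ratio(thickness_nm, ratio_bulk, imfp_nm, theta_deg):
    """Forward model: overlayer signal grows as 1 - exp(-x), substrate decays as exp(-x)."""
    x = thickness_nm / (imfp_nm * math.cos(math.radians(theta_deg)))
    return ratio_bulk * (1.0 - math.exp(-x)) / math.exp(-x)

# Hypothetical film: 2.0 nm thick, attenuation length 2.5 nm, bulk ratio 0.8
for angle in (0, 30, 60):
    r = simulated_ratio(2.0, 0.8, 2.5, angle)
    print(angle, round(r, 3), round(overlayer_thickness(r, 0.8, 2.5, angle), 3))
```

The measured ratio rises at grazing emission because the effective probing depth shrinks with cos θ. For a uniform film, the recovered thickness should still be the same at every angle. Angle-dependent disagreement would hint that the film is not uniform.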

Time-of-Flight Secondary Ion Mass Spectrometry (TOF-SIMS): TOF-SIMS involves irradiating the surface with high-speed ions and analyzing the secondary ions emitted from the surface. It is characterized by extremely high surface sensitivity and the ability to provide molecular mass information for organic compounds, as well as high-sensitivity inorganic element analysis. It is particularly powerful for mapping the distribution of organic matter on surfaces [1].
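The "time-of-flight" in TOF-SIMS refers to how mass is measured. Ions accelerated through the same potential U acquire the same kinetic energy, so lighter ions cross a field-free drift length L faster, following t = L·√(m/(2zeU)). The sketch below models an idealized linear analyzer. The 2 m drift length and 2 kV acceleration are hypothetical, and real instruments typically add reflectrons and other corrections.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
AMU = 1.66053906660e-27      # unified atomic mass unit, kg

def flight_time_s(mass_u, charge=1, accel_v=2000.0, drift_m=2.0):
    """Flight time over a field-free drift region after acceleration through accel_v volts."""
    velocity = math.sqrt(2 * charge * E_CHARGE * accel_v / (mass_u * AMU))
    return drift_m / velocity

def mass_to_charge(time_s, accel_v=2000.0, drift_m=2.0):
    """Invert t = L * sqrt(m / (2 z e U)) to get m/z in u per elementary charge."""
    return 2 * E_CHARGE * accel_v * (time_s / drift_m) ** 2 / AMU

for mz in (28.0, 100.0, 400.0):
    print(f"m/z {mz:>5.0f}: {flight_time_s(mz) * 1e6:.2f} us")
```

Flight time grows with √(m/z), so quadrupling the mass only doubles the flight time. In practice, spectra are usually calibrated by fitting t = a√(m/z) + t₀ to known peaks rather than relying on nominal geometry.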

Auger Electron Spectroscopy (AES): In AES, a focused electron beam excites the sample, and the generated Auger electrons are observed for qualitative and quantitative surface analysis. A key strength of AES is its extremely high spatial resolution compared to other surface analysis methods, making it ideal for observing metal and semiconductor surfaces and analyzing micro-level foreign substances [3] [1].

Complementary and Supporting Techniques

Other techniques provide complementary information that, when combined with the primary methods, create a comprehensive surface profile [3].

- Ion Scattering Spectroscopy (ISS): Also known as Low-Energy Ion Scattering (LEIS), this is a highly surface-sensitive technique that probes the elemental composition of the first atomic layer of a surface. A beam of noble gas ions scatters from the surface, and the kinetic energy of the scattered ions is measured to determine the mass of the surface atoms. It is valuable for studying surface segregation and layer growth [3].

- Reflected Electron Energy Loss Spectroscopy (REELS): REELS probes the electronic structure of a material's surface. An incident electron beam scatters from the sample, and the energy losses in the scattered electrons—resulting from electronic transitions in the sample—are measured. REELS can measure properties like electronic band gaps and, unlike XPS, can sometimes detect hydrogen [3].

- UV Photoelectron Spectroscopy (UPS): UPS works like XPS but uses UV photons (for example, He I radiation at 21.2 eV) instead of X-rays to excite photoelectrons. Because UV photons have much lower energy, the detected photoelectrons come only from shallow, low-binding-energy valence levels involved in bonding. This provides a "fingerprint" for compounds and information on the highest occupied (valence) states [3].
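In ISS, the conversion from scattered-ion energy to surface-atom mass follows from elastic binary-collision kinematics. For a projectile of mass M₁ scattered through angle θ by a surface atom of mass M₂ > M₁, the energy ratio is E₁/E₀ = [(M₁ cos θ + √(M₂² − M₁² sin²θ))/(M₁ + M₂)]².

The sketch below evaluates this ratio and its inverse. It ignores inelastic losses and neutralization. The He⁺ projectile, the 135° scattering angle and the chosen elements are illustrative assumptions.

```python
import math

def kinematic_factor(m_ion, m_target, theta_deg):
    """E1/E0 for elastic binary scattering through theta_deg (valid for m_target > m_ion)."""
    th = math.radians(theta_deg)
    root = math.sqrt(m_target ** 2 - (m_ion * math.sin(th)) ** 2)
    return ((m_ion * math.cos(th) + root) / (m_ion + m_target)) ** 2

def target_mass(m_ion, energy_ratio, theta_deg):
    """Invert the kinematic factor: surface-atom mass (u) from a measured E1/E0."""
    k = energy_ratio
    c = math.cos(math.radians(theta_deg))
    return m_ion * (1 + k - 2 * math.sqrt(k) * c) / (1 - k)

M_HE = 4.0026  # He-4 projectile mass, u
for element, mass in (("O", 15.999), ("Cu", 63.546), ("Au", 196.967)):
    k = kinematic_factor(M_HE, mass, 135.0)
    print(f"{element:>2}: E1/E0 = {k:.3f}, recovered mass = {target_mass(M_HE, k, 135.0):.2f} u")
```

The ratios crowd toward 1 for heavy targets. As a result, neighbouring heavy elements are harder to separate than light ones with a light projectile.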

Table 1: Comparison of Major Surface Analysis Techniques

| Technique | Primary Excitation | Detected Signal | Key Information | Primary Applications |
|---|---|---|---|---|
| XPS (X-ray Photoelectron Spectroscopy) | X-rays | Photoelectrons | Elemental composition, chemical bonding states | Analysis of various materials (organic/inorganic), surface chemistry, thin films [3] [1] |
| TOF-SIMS (Time-of-Flight SIMS) | High-speed ions | Secondary ions | Molecular structure, elemental distribution, extreme surface sensitivity | Mapping organic distribution, surface contamination, segregation studies [1] |
| AES (Auger Electron Spectroscopy) | Electron beam | Auger electrons | Elemental composition, high-resolution mapping | Micro-analysis of foreign substances, metal/semiconductor surfaces [3] [1] |
| ISS (Ion Scattering Spectroscopy) | Noble gas ions | Scattered ions | Elemental composition of the first atomic layer | Surface segregation, layer growth studies [3] |

Quantitative Data in Surface Analysis

Quantitative data in surface analysis involves summarizing measurements to understand the distribution and relationships within the data. This often involves creating frequency tables and histograms to visualize the distribution of a variable [4].

Presenting Quantitative Data

A frequency table collates data into exhaustive and mutually exclusive intervals ('bins'). Continuous data are always recorded to finite precision (that is, rounded), so bins must be carefully constructed to avoid ambiguity. This is often done by defining boundaries to one more decimal place than the raw data [4]. A histogram is a graphical representation of a frequency table: the width of a bar represents an interval of values, and the height represents the number or percentage of observations within that range [4]. The choice of bin size and boundaries can substantially change the histogram's appearance. Software tools allow experimentation to find the most informative view of the data's distribution, including its shape, average, variation, and any unusual features like outliers [4].

Table 2: Example Frequency Table for Continuous Data: Baby Birth Weights [4]

| Weight Group (kg) | Alternative Weight Group, Boundaries to One Extra Decimal Place (kg) | Number of Babies | Percentage of Babies |
|---|---|---|---|
| 1.5 to under 2.0 | 1.45 to 1.95 | 1 | 2% |
| 2.0 to under 2.5 | 1.95 to 2.45 | 4 | 9% |
| 2.5 to under 3.0 | 2.45 to 2.95 | 4 | 9% |
| 3.0 to under 3.5 | 2.95 to 3.45 | 17 | 39% |
| 3.5 to under 4.0 | 3.45 to 3.95 | 17 | 39% |
| 4.0 to under 4.5 | 3.95 to 4.45 | 1 | 2% |
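Building the frequency table in Table 2 comes down to two steps: assign each value to a half-open bin, then convert counts to rounded percentages. The sketch below uses the table's "alternative" boundaries, which sit one decimal beyond weights recorded to 0.1 kg, so no recorded value can fall exactly on an edge. The percentages are computed from the table's own counts. The four readings passed to `bin_counts` are hypothetical, and the helper names are illustrative.

```python
from bisect import bisect_right

# Boundaries one decimal place beyond data recorded to 0.1 kg
EDGES_KG = [1.45, 1.95, 2.45, 2.95, 3.45, 3.95, 4.45]

def bin_counts(values, edges):
    """Count values into half-open bins [edges[i], edges[i+1]); out-of-range values are skipped."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect_right(edges, v) - 1
        if 0 <= i < len(counts):
            counts[i] += 1
    return counts

def percentages(counts):
    """Whole-number percentage of the total in each bin."""
    total = sum(counts)
    if total == 0:
        return [0] * len(counts)
    return [round(100 * c / total) for c in counts]

table2_counts = [1, 4, 4, 17, 17, 1]                 # "Number of Babies" column
print(percentages(table2_counts))                    # [2, 9, 9, 39, 39, 2]
print(bin_counts([1.9, 2.0, 3.4, 3.5], EDGES_KG))    # hypothetical readings -> [1, 1, 0, 1, 1, 0]
```

A reading of 2.0 kg lands unambiguously in the 1.95 to 2.45 bin, which corresponds to "2.0 to under 2.5" in the first column.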

Experimental Protocols and Methodologies

Rigorous experimental protocols are essential for obtaining reliable surface analysis data, particularly because sample preparation can severely alter the very surface properties being measured.

Cell Surface Analysis Protocol

A systematic investigation of model organisms highlights the profound effects of cell preparation protocols on surface properties [2]. The following methodology outlines key steps and considerations.

- Cell Cultivation: Grow model organisms under defined conditions. For example, use carbon- and nitrogen-limited Psychrobacter sp. strain SW8 (as a glycocalyx-bearing model), Escherichia coli (a gram-negative model without a glycocalyx), and Staphylococcus epidermidis (a gram-positive model without a glycocalyx) [2].

- Cell Manipulation Procedures: Subject harvested cells to various common manipulation procedures to assess their impact. Critical procedures to test include:

  - Centrifugation: Centrifuge at different speeds, including high speeds (e.g., 15,000 × g).

  - Washing/Resuspension: Wash cells and perform final resuspension using media with different ionic strengths (e.g., high-salt solutions vs. low-salt buffers).

  - Desiccation: Air-dry cells for contact angle measurements or freeze-dry for sensitive spectroscopic analysis like XPS.

  - Hydrocarbon Contact: Place cells in contact with a hydrocarbon (e.g., hexadecane) for hydrophobicity assays [2].

- Analysis of Surface Properties: After each manipulation procedure, analyze the cells using multiple techniques to assess changes in surface properties. Key analyses include:

  - Physicochemical Properties: Measure electrophoretic mobility, adhesion to solid substrata, and affinity to various Sepharose columns.

  - Structural Integrity and Viability: Check for structural disruption and assess cell viability/culturability [2].

- Data Interpretation: Compare the values obtained for surface parameters across the different preparation methods. Note that methods allegedly measuring similar properties (e.g., different hydrophobicity assays) often do not correlate, underscoring the need for method validation for each microorganism [2].
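The 15,000 × g figure in the centrifugation step is a relative centrifugal force (RCF), not a rotor speed. The rpm needed to reach it depends on the rotor radius: RCF = ω²r/g, with ω = 2π·rpm/60. The sketch below converts between the two. The 6 cm and 10 cm radii are hypothetical rotors.

```python
import math

G_STANDARD = 9.80665  # standard gravity, m/s^2

def rcf(rpm, radius_cm):
    """Relative centrifugal force (multiples of g) at radius_cm for a given rotor speed."""
    omega = 2 * math.pi * rpm / 60.0          # angular speed, rad/s
    return omega ** 2 * (radius_cm / 100.0) / G_STANDARD

def rpm_for(rcf_target, radius_cm):
    """Rotor speed (rpm) needed to reach rcf_target at radius_cm."""
    omega = math.sqrt(rcf_target * G_STANDARD / (radius_cm / 100.0))
    return omega * 60.0 / (2 * math.pi)

for radius in (6.0, 10.0):   # hypothetical rotor radii, cm
    print(f"r = {radius} cm: {rpm_for(15000, radius):.0f} rpm for 15,000 x g")
```

The same rpm produces different forces on different rotors. Stating the force in × g therefore keeps a preparation protocol transferable between laboratories.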

XPS Depth Profiling Protocol

Depth profiling is a fundamental technique for understanding the composition of a material as a function of depth.

- Sample Preparation: The solid sample is mounted on a suitable holder and introduced into the ultra-high vacuum (UHV) chamber of the XPS instrument. Charge compensation for insulating samples is critical and is achieved by supplying electrons from an external source to neutralize positive surface charge [3].

- Sputter-Etch Cycle: Material is controllably removed using an ion beam. The ion source must be selected based on the material:

  - Monatomic Ions: Traditional sources used for hard materials.

  - Gas Cluster Ions: Essential for profiling softer materials (e.g., organics, polymers) that would be damaged by monatomic ions, thereby enabling the analysis of previously inaccessible material classes [3].

- Data Acquisition and Analysis: Following each sputter-etch cycle, XPS data is collected from the newly uncovered surface. This process is repeated multiple times to build a high-resolution composition profile from the surface to the bulk [3].
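A depth profile is recorded against cumulative etch time, so etch time has to be converted into depth. One common approach works in three steps:

1. Profile a reference film of known thickness.
2. Locate the interface where the overlayer signal falls to 50% of its initial value, and divide the thickness by that time to get a sputter rate.
3. Apply that rate to the unknown sample.

The sketch below assumes a constant sputter rate. The 100 nm film and the signal values are hypothetical. Real rates differ between materials and with ion species and energy, so the resulting depth scale is approximate.

```python
def interface_time(times_s, signal):
    """Linearly interpolate the time at which a falling signal reaches half its initial value."""
    half = signal[0] / 2.0
    for i in range(len(signal) - 1):
        s0, s1 = signal[i], signal[i + 1]
        if s0 >= half >= s1 and s0 != s1:
            t0, t1 = times_s[i], times_s[i + 1]
            return t0 + (s0 - half) * (t1 - t0) / (s0 - s1)
    raise ValueError("signal never falls to half of its initial value")

def sputter_rate(reference_thickness_nm, time_to_interface_s):
    """Sputter rate (nm/s) from a reference film of known thickness."""
    return reference_thickness_nm / time_to_interface_s

def etch_time_to_depth(times_s, rate_nm_per_s):
    """Convert cumulative etch times to depths, assuming the rate stays constant."""
    return [t * rate_nm_per_s for t in times_s]

times = [0, 60, 120, 180, 240, 300]      # cumulative etch time, s (hypothetical)
overlayer = [50, 50, 48, 20, 2, 0]       # overlayer signal, at.% (hypothetical)
t_half = interface_time(times, overlayer)
rate = sputter_rate(100.0, t_half)       # hypothetical 100 nm reference film
print(f"interface at {t_half:.1f} s, rate {rate:.3f} nm/s")
print([round(d, 1) for d in etch_time_to_depth(times, rate)])
```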

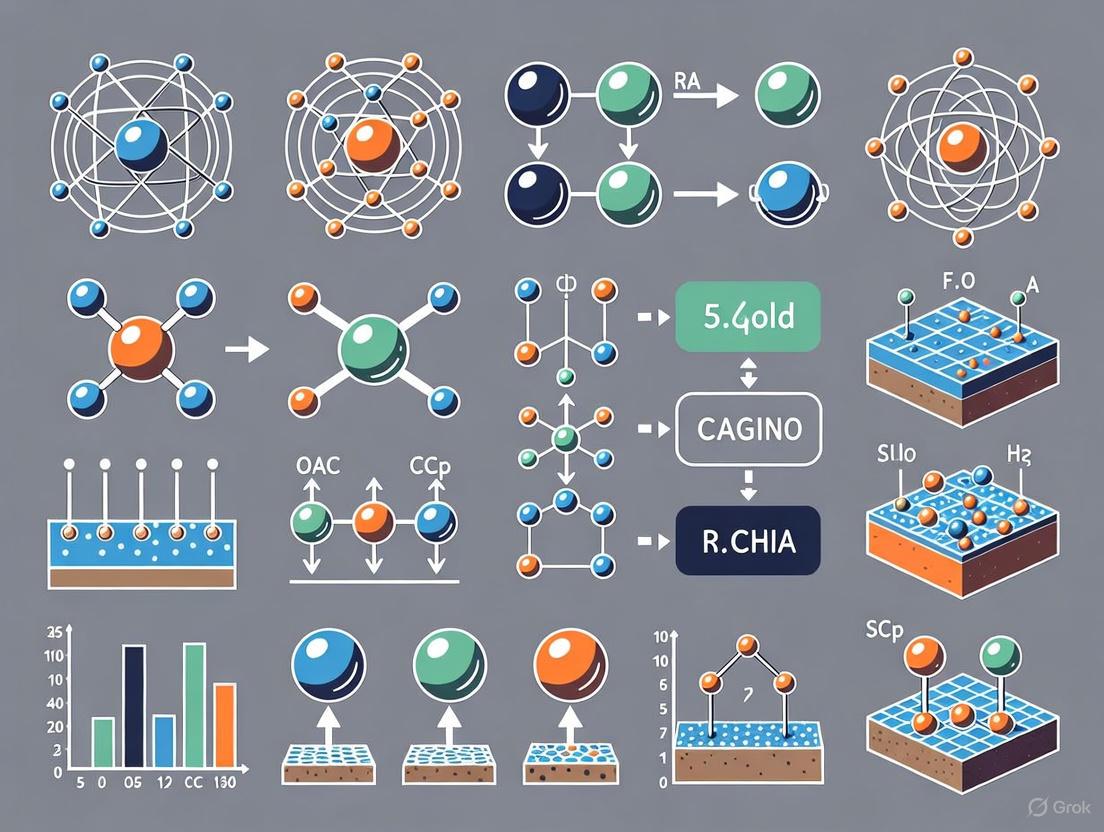

The workflow for a comprehensive surface analysis project, from sample preparation to data synthesis, can be visualized as follows:

Workflow for Surface Analysis

The Scientist's Toolkit: Research Reagent Solutions

Successful surface analysis relies on a suite of essential materials and tools. The following table details key research reagent solutions used in the field.

Table 3: Essential Research Reagent Solutions for Surface Analysis

| Item / Reagent | Function / Purpose | Technical Considerations |
|---|---|---|
| Monatomic Ion Source (e.g., Ar⁺) | Used for sputter etching and depth profiling of hard materials (metals, inorganic semiconductors). | Can cause damage to soft materials and organic surfaces, leading to misinterpretation of data [3]. |
| Gas Cluster Ion Source (e.g., Arₙ⁺) | Enables depth profiling of soft, fragile, and organic materials (polymers, biologics) by distributing sputtering energy. | Reduces damage; essential for analyzing materials previously inaccessible to XPS depth profiling [3]. |
| Charge Neutralization Flood Gun | Supplies low-energy electrons to the surface of electrically insulating samples to counteract positive charge buildup from X-ray irradiation. | Prevents peak shifting and broadening in XPS spectra, which is critical for accurate binding energy measurement [3]. |
| Specific Resuspension Media | Used to wash and resuspend biological cells without altering their native surface properties for analysis. | The type of medium (e.g., high-salt vs. low-salt buffers) strongly influences measured cell surface parameters [2]. |
| Ultra-High Vacuum (UHV) Environment | The required operational environment for surface analyzers (pressure ≤ 10⁻⁹ atm). | Minimizes atmospheric contamination and scattering of signal electrons/ions, allowing accurate detection [1]. |
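The gentler action of a gas cluster source comes from energy sharing. A cluster of n argon atoms accelerated to energy E delivers roughly E/n per atom, far less than a monatomic ion carries. The sketch below makes that arithmetic explicit. Equal sharing is an idealization, and the beam energies and cluster sizes are hypothetical settings chosen only for comparison.

```python
def energy_per_atom_ev(beam_energy_ev, atoms_per_projectile):
    """Energy per constituent atom, assuming the projectile energy is shared equally."""
    return beam_energy_ev / atoms_per_projectile

# Hypothetical beam settings chosen only for comparison
beams = [("Ar+ (monatomic)", 4000.0, 1),
         ("Ar1000+ cluster", 10000.0, 1000),
         ("Ar2500+ cluster", 10000.0, 2500)]
for label, energy_ev, size in beams:
    print(f"{label:<16} {energy_per_atom_ev(energy_ev, size):g} eV per atom")
```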

Advanced Applications and Correlative Workflows

Advanced applications often combine multiple techniques to leverage their respective strengths, creating a more complete picture of the surface than any single method could provide.

- Correlative Imaging and Surface Analysis (CISA) Workflow: This integrated approach bridges the gap between high-resolution imagery and detailed surface chemistry. While Scanning Electron Microscopy (SEM) with Energy-Dispersive X-ray spectroscopy (EDX) provides high-resolution imagery and composition information, it may not reveal crucial surface chemistry. Conversely, XPS offers detailed surface chemistry but may lack the high-resolution imagery to explain the interplay between chemistry and structure. The CISA Workflow integrates datasets from both XPS and SEM instruments, enabling comprehensive sample understanding [3].

- Hard X-ray Photoelectron Spectroscopy (HAXPES): Employing higher energy X-ray sources (e.g., synchrotron radiation) than the standard Al K-alpha source enhances XPS analysis. The higher photon energy increases the inelastic mean free path of electrons, enabling analysis from deeper regions (several tens of nanometers). It also allows access to core levels that are otherwise inaccessible and can be combined with X-ray induced Auger features to generate Wagner plots for interpreting chemical states [3].

- Raman Spectroscopy in Surface Analysis: While providing a larger depth of analysis than techniques like XPS, Raman spectroscopy offers complementary insights into molecular bonding. It is highly sensitive to structural changes and is particularly valuable for understanding polymers (where bulk and surface information are complementary) and nanomaterials like graphene and carbon nanotubes [3].
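The Wagner plots mentioned for HAXPES chart an element's Auger kinetic energy against its photoelectron binding energy. A quantity commonly read from them is the modified Auger parameter, α' = E_K(Auger) + E_B(photoelectron). It is insensitive to static sample charging: a surface charge lowers the Auger kinetic energy and raises the apparent binding energy by the same amount. The peak positions and the 3.7 eV shift below are hypothetical.

```python
def modified_auger_parameter(auger_ke_ev, photoelectron_be_ev):
    """Modified Auger parameter: alpha' = KE(Auger) + BE(photoelectron), in eV."""
    return auger_ke_ev + photoelectron_be_ev

# Hypothetical peak positions for one element, in eV
auger_ke, photo_be = 918.6, 932.6
shift = 3.7  # hypothetical positive charging: Auger KE drops, apparent BE rises

print(modified_auger_parameter(auger_ke, photo_be))
print(modified_auger_parameter(auger_ke - shift, photo_be + shift))
```

Both calls give the same α', which is why the parameter is useful for chemical-state assignment on insulating samples.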

The relationships and data outputs between the primary surface analysis techniques and their complementary partners are illustrated below.

Technique Relationships & Data Outputs

A comprehensive understanding of the surface—the critical interface in materials and biology—requires the optimal utilization of a suite of surface analysis techniques. From the elemental and chemical state information provided by XPS to the high-sensitivity molecular mapping of TOF-SIMS and the high spatial resolution of AES, each method contributes a unique piece to the puzzle. The insights gained are powerful, enabling advancements in semiconductor technology, biomaterials, drug development, and countless other fields. However, these insights are entirely dependent on rigorous methodology, from sample preparation that preserves the native surface state to the intelligent application of correlative workflows that combine multiple analytical approaches. As materials and biological questions become increasingly complex, the continued development and sophisticated application of these surface analysis concepts will remain at the forefront of innovation.

Standardized terminology serves as the fundamental bedrock of scientific progress, enabling unambiguous communication, ensuring data reproducibility, and facilitating global collaboration across diverse fields of research. In the specialized domain of surface chemical analysis, where techniques like X-ray photoelectron spectroscopy (XPS) and secondary ion mass spectrometry (SIMS) provide critical material characterization data, the consistent use of defined terms is particularly crucial. The International Organization for Standardization (ISO) and the International Union of Pure and Applied Chemistry (IUPAC) have emerged as the preeminent authorities developing and maintaining these vital terminological standards.

This technical guide examines the complementary roles of ISO 18115 for surface chemical analysis vocabulary and IUPAC's nomenclature systems for chemical compounds. These frameworks provide the necessary linguistic infrastructure for researchers, scientists, and drug development professionals to communicate findings with precision, particularly within the context of advanced materials characterization and pharmaceutical development. The adoption of these standards directly addresses challenges in interdisciplinary research, where consistent terminology prevents misinterpretation of analytical data, thereby strengthening the validity of scientific conclusions.

The ISO 18115 Standard for Surface Chemical Analysis

Scope and Development

ISO 18115, "Surface chemical analysis — Vocabulary," is a comprehensive international standard that provides definitive explanations for terms used in surface analytical techniques. The standard is divided into two distinct parts: Part 1 covers general terms and those used in spectroscopy [5] [6], while Part 2 focuses specifically on terminology related to scanning-probe microscopy [5]. This partitioning reflects the specialized nature of the field and allows for more targeted referencing by practitioners.

The standard is a living document that is revised periodically to reflect technological advancements. The 2001 version was subsequently revised and expanded, with Part 1 updated to its 2013 edition [5] [6]. This evolution keeps the vocabulary current with emerging methodologies and instrumental developments in surface science.

Technical Coverage and Application

ISO 18115 provides an extensive lexicon of approximately 900 terms essential for the accurate description and interpretation of surface analysis data [5]. This vocabulary spans multiple spectroscopic techniques, including Auger electron spectroscopy (AES), secondary ion mass spectrometry (SIMS), X-ray photoelectron spectroscopy (XPS), and various forms of microscopy such as atomic force microscopy (AFM) and scanning tunnelling microscopy (STM) [5] [6].

For researchers in drug development, this standardization is particularly valuable when characterizing the surface properties of pharmaceutical materials, where consistency in terms like "analysis area," "information depth," and "lateral resolution" ensures reliable communication of methodological details and results across international collaborations. The standard also includes definitions for 52 acronyms, addressing the potential confusion that can arise from the prolific use of abbreviations in technical literature [6].

Table: Key Components of ISO 18115 Standard for Surface Chemical Analysis

| Component | Description | Techniques Covered | Number of Terms |
|---|---|---|---|
| Part 1: General Terms & Spectroscopy | Defines general concepts and terms used in spectroscopic methods | AES, XPS, SIMS, UPS, REELS | 548 terms [6] |
| Part 2: Scanning-Probe Microscopy | Focuses on terminology for probe-based imaging techniques | AFM, STM, SNOM | Remaining 352 terms (approximate) [5] |
| Acronym Definitions | Standardized explanations for common abbreviations | Across all covered techniques | 52 acronyms [6] |

IUPAC Nomenclature Systems

Authority and Governance

The International Union of Pure and Applied Chemistry (IUPAC) serves as the universally recognized authority on chemical nomenclature and terminology [7] [8]. Two primary IUPAC bodies oversee this work: Division VIII – Chemical Nomenclature and Structure Representation and the Interdivisional Committee on Terminology, Nomenclature, and Symbols [7] [8]. These groups develop comprehensive recommendations to establish "unambiguous, uniform, and consistent nomenclature and terminology" across chemical disciplines [7].

IUPAC's nomenclature recommendations are published in its journal, Pure and Applied Chemistry (PAC), and are compiled into the well-known IUPAC Color Books (e.g., the Blue Book for organic chemistry, the Red Book for inorganic chemistry, and the Purple Book for polymers) [9] [8]. For broader accessibility, IUPAC also publishes "Brief Guides" that summarize key nomenclature principles for different chemical domains [9].

Organic Chemistry Nomenclature Systems

IUPAC has established multiple complementary approaches for naming organic compounds, each suited to different structural requirements:

Substitutive Nomenclature: This is the most widely used system, based on identifying a principal functional group that is designated as a suffix to the name of the parent carbon skeleton, with other substituents added as prefixes [8]. The selection of the principal group follows a strict priority list established by IUPAC.

Radicofunctional Nomenclature (termed functional class nomenclature in current IUPAC recommendations): In this approach, functional classes are named as the main group without suffixes, while the remainder of the molecule is treated as one or more radicals [8].

Additive Nomenclature: Used for naming structures where atoms have been added to a parent framework, indicated by prefixes such as "hydro-" for hydrogen addition [8].

Subtractive Nomenclature: The inverse of additive nomenclature, using prefixes like "dehydro-" to indicate removal of atoms from a parent structure [8]. This system finds particular application in natural products chemistry.

Replacement Nomenclature: Allows specification of carbon chain positions where carbon atoms are replaced by heteroatoms, permitted when it "allows for a significant simplification" of the systematic name [8].

Table: IUPAC Nomenclature Systems for Organic Chemistry

| Nomenclature System | Fundamental Principle | Common Applications | Example Prefixes/Suffixes |
|---|---|---|---|
| Substitutive | Replacement of hydrogen atoms by functional groups | Most organic compounds with functional groups | -ol (alcohols), -one (ketones), bromo- (halogens) |
| Radicofunctional | Functional classes as main group with radical components | Simple ethers, amines | alkyl ether, alkyl amine |
| Additive | Addition of atoms to parent structure | Hydrogenation products | hydro- |
| Subtractive | Removal of atoms from parent structure | Dehydrogenated compounds, natural products | dehydro-, nor- |
| Replacement | Replacement of carbon atoms by heteroatoms | Heterocyclic compounds, polyethylene glycols | oxa- (oxygen), aza- (nitrogen) |

Typographic and Capitalization Rules

IUPAC nomenclature includes specific typographic conventions that ensure clarity and consistency in chemical communication [8]:

Italics: Used for stereochemical descriptors (cis, trans, R, S), the letters o, m, p (for ortho, meta, para), element symbols indicating substitution sites (N-benzyl, O-acetyl), and the symbol H when marking hydrogen position (3H-pyrrole) [8].

Capitalization: In systematic names, the first letter of the main part of the name is capitalized when required (e.g., at the beginning of a sentence), while prefixes such as sec, tert, ortho, meta, para, and locants are not considered part of the main name [8]. However, prefixes like "cyclo," "iso," "neo," or "spiro" are considered part of the main name and are capitalized accordingly [8].

Vowel Elision: Systematic application of vowel elision rules avoids awkward double vowels. Examples include the elision of "a" before another vowel in heterocyclic names ("tetrazole" instead of "tetraazole") and, in traditional usage, elision before "a" or "o" in multiplicative prefixes ("pentoxide" instead of "pentaoxide") [8].
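The capitalization rule can be expressed as a small string routine: skip the leading tokens that are not part of the main name, then capitalize the next letter. The sketch below is hypothetical and not an IUPAC-provided tool. Its regular expression covers only the prefixes named in this section (locants, indicated hydrogen, sec/tert, o/m/p, cis/trans, N-/O-/S- substitution sites and R/S descriptors). Parenthesized E/Z descriptors are added as an assumption. Names beginning with other italic prefixes would need more patterns.

```python
import re

# Leading tokens treated as NOT part of the main name (illustrative, not exhaustive)
_NON_MAIN_PREFIX = re.compile(
    r"(?:"
    r"\d+(?:,\d+)*"                      # locants: 2, 1,2
    r"|\d+H"                             # indicated hydrogen: 3H
    r"|sec|tert"                         # alkyl prefixes
    r"|o|m|p"                            # ortho/meta/para abbreviations
    r"|cis|trans"                        # geometric descriptors
    r"|[NOS]"                            # substitution sites: N-, O-, S-
    r"|\(\d*[RSEZ](?:,\d*[RSEZ])*\)"     # stereodescriptors: (R), (2R,3S)
    r")-"
)

def capitalize_for_sentence_start(name: str) -> str:
    """Capitalize the first letter of the main part of a systematic name."""
    pos = 0
    # Pattern.match(string, pos) anchors at pos; each match consumes at least "x-"
    while (m := _NON_MAIN_PREFIX.match(name, pos)) is not None:
        pos = m.end()
    if pos >= len(name):
        return name
    return name[:pos] + name[pos].upper() + name[pos + 1:]

for n in ("tert-butylbenzene", "2-methylpropane", "isobutane", "N-benzylacetamide", "3H-pyrrole"):
    print(capitalize_for_sentence_start(n))
```

Because "iso" and "cyclo" are not in the prefix list, "isobutane" becomes "Isobutane". This matches the rule that such prefixes belong to the main name.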

Methodologies and Experimental Protocols

Workflow for Applying Standardized Terminology in Surface Analysis

The following diagram illustrates the systematic process for applying standardized terminology in surface analysis experiments, integrating both ISO and IUPAC guidelines:

This workflow demonstrates how standardized terminology integrates throughout the experimental process, from initial sample characterization using IUPAC nomenclature for precise chemical identification, through technique-specific parameter definition using ISO vocabulary, to final reporting that combines both systems for comprehensive scientific communication.

Interrelationship Between Terminology Systems

The relationship between IUPAC chemical nomenclature and ISO surface analysis terminology represents a complementary framework essential for complete materials characterization:

This complementary relationship shows how IUPAC nomenclature defines chemical identity (what is being analyzed), while ISO 18115 vocabulary defines analytical methodology (how it is characterized). Together, they enable a complete material description that is essential for reproducible research, particularly in pharmaceutical development where both composition and surface properties determine material behavior.

Essential Research Reagent Solutions

The following table details key reagents and materials referenced in surface analysis research, with their standardized functions according to established terminology:

Table: Essential Research Reagents and Materials for Surface Analysis

| Reagent/Material | Standardized Function | Application Context |
|---|---|---|
| Zeolites | Porous molecular sieves for size-exclusion separation and catalysis | Used as reference materials in surface area analysis; modeling confined chemical environments [10] |
| Metal-Organic Frameworks (MOFs) | Coordination polymers with tunable porosity for gas storage and separation | Model systems for studying surface adsorption phenomena; reference materials for pore size distribution [10] |
| Porous Coordination Polymers (PCPs) | Metal-organic frameworks with specific coordination geometries | Comparative studies with zeolites for understanding confinement effects [10] |
| Reference Standard Samples | Certified materials with known composition for instrument calibration | Essential for quantitative surface analysis (XPS, AES, SIMS) according to ISO guidelines [5] [6] |

Implications for Pharmaceutical Research and Development

The integration of ISO 18115 and IUPAC nomenclature systems creates a robust framework for pharmaceutical development, where precise material characterization is critical for regulatory approval and quality control. Drug development professionals benefit from this terminological standardization through enhanced reproducibility in excipient characterization, active pharmaceutical ingredient (API) surface analysis, and consistent reporting of analytical methods in regulatory submissions.

In practice, the combined application of these standards ensures that surface properties of pharmaceutical materials—which directly influence dissolution, stability, and bioavailability—are characterized and communicated with sufficient precision to enable technology transfer between research, development, and manufacturing facilities. This is particularly crucial for complex drug delivery systems where surface chemistry governs drug release profiles and biological interactions.

Standardized terminology, as exemplified by ISO 18115 for surface chemical analysis and IUPAC guidelines for chemical nomenclature, provides an indispensable foundation for scientific and technological advancement. These complementary systems enable researchers to communicate with the precision necessary for reproducible research, effective collaboration, and reliable knowledge transfer across disciplines and geographic boundaries. In surface chemical analysis, continued adherence to and development of these standards remains crucial for addressing emerging characterization challenges in materials science and pharmaceutical development.

Surface analysis is a critical discipline in materials science, chemistry, and biomedicine, as the surface represents the unique interface between a material and its environment where crucial interactions occur. The primary challenge in surface analysis stems from the minute mass of material at the surface region compared to the bulk. For a 1 cm² sample with approximately 10¹⁵ atoms in the surface layer, detecting impurities at the 1% level requires sensitivity to about 10¹³ atoms. This level of detection contrasts sharply with bulk analysis techniques, where the same number of molecules in a 1 cm³ liquid sample (≈10²² molecules) would require one part-per-billion (ppb) sensitivity—a level few techniques can achieve. This discrepancy explains why common spectroscopic techniques like NMR (detection limit ≈10¹⁹ molecules) are unsuitable for surface studies except on high-surface-area samples [11].
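The detection-limit comparison above is order-of-magnitude arithmetic, reproduced in the sketch below. The inputs are the paragraph's own round numbers. The water cross-check assumes a density of 1 g/cm³ and is added only to show that 10²² molecules per cm³ is the right order of magnitude.

```python
AVOGADRO = 6.02214076e23   # mol^-1

surface_atoms = 1e15       # ~atoms in the top layer of a 1 cm^2 sample
impurity_fraction = 0.01   # 1 % impurity level
atoms_to_detect = surface_atoms * impurity_fraction

bulk_molecules = 1e22      # order of magnitude for 1 cm^3 of a liquid
bulk_fraction = atoms_to_detect / bulk_molecules   # same count expressed as a bulk concentration

# Cross-check of the 1e22 figure for water (assumes 1 g/cm^3): 1 g / (18.015 g/mol) * N_A
water_molecules_per_cm3 = 1.0 / 18.015 * AVOGADRO

nmr_limit = 1e19           # approximate NMR detection limit quoted in the text
print(f"atoms to detect:           {atoms_to_detect:.0e}")
print(f"equivalent bulk fraction:  {bulk_fraction:.0e} (1 ppb = 1e-09)")
print(f"water molecules per cm^3:  {water_molecules_per_cm3:.2e}")
print(f"NMR limit / requirement:   {nmr_limit / atoms_to_detect:.0e}")
```

The last line shows why NMR is unsuitable for surfaces: its detection limit is about a million times above the number of surface atoms that must be detected.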

The fundamental distinction in surface analysis lies in differentiating between surface-sensitive and surface-specific techniques. A surface-sensitive technique is more sensitive to atoms located near the surface than to those in the bulk, meaning the main signal originates from the surface region. In contrast, a truly surface-specific technique should, in principle, only yield signals from the surface region, though this depends heavily on how "surface region" is defined. Most practical techniques fall into the surface-sensitive category: most of the signal comes from within a few atomic layers of the surface, but a small portion may originate deeper in the solid [11]. Because the surface region is so sensitive, proper sample handling is paramount. Exposure to air can deposit hydrocarbon films, and even brief contact can transfer salts and oils that significantly alter surface composition [12].

Fundamental Principles of Sampling Depth and Surface Sensitivity

Physical Basis for Surface Sensitivity

The surface sensitivity of analytical techniques primarily depends on the short inelastic mean free path (IMFP) of low-energy electrons in solids. When electrons with energies between 20-1000 eV travel through a material, they undergo inelastic scattering after traveling very short distances (typically 0.5-3 nm), which corresponds to just a few atomic monolayers [11]. This limited travel distance means that only electrons generated near the surface can escape without energy loss and be detected, making techniques that detect these electrons inherently surface-sensitive.

The relationship between electron energy and IMFP follows an approximately universal curve, in which the IMFP reaches a minimum for electrons with kinetic energies around 50-100 eV. Electron spectroscopic techniques such as X-ray Photoelectron Spectroscopy (XPS) and Auger Electron Spectroscopy (AES) exploit this principle: both measure the energy of electrons emitted from the top 1-10 nm of a material [13].

Signal generated at depth z below the surface is attenuated as I = I₀e^(-z/λ), where I₀ is the intensity the same layer would contribute if it were at the surface. Summing over all depths, the fraction of the detected signal that originates within a depth d is 1 − e^(-d/λ). For d = 3λ this is 1 − e⁻³ ≈ 0.95. For this reason the sampling depth (d) is often defined as three times the IMFP (λ), the depth from which 95% of the detected signal originates [11].
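The "three IMFPs contain 95% of the signal" rule follows directly from the exponential attenuation law. The sketch below evaluates the cumulative fraction and its inverse. It assumes a homogeneous sample and straight-line escape. It also includes the cos θ factor for emission at angle θ from the surface normal, which is the basis of angle-resolved measurements. The 2.0 nm IMFP is a hypothetical value.

```python
import math

def fraction_within(depth_nm, imfp_nm, theta_deg=0.0):
    """Fraction of the detected signal originating between the surface and depth_nm.

    Assumes I(z) proportional to exp(-z / (imfp * cos(theta))), theta from the surface normal.
    """
    effective = imfp_nm * math.cos(math.radians(theta_deg))
    return 1.0 - math.exp(-depth_nm / effective)

def depth_for_fraction(fraction, imfp_nm, theta_deg=0.0):
    """Depth (nm) from which the given fraction of the signal originates."""
    effective = imfp_nm * math.cos(math.radians(theta_deg))
    return -effective * math.log(1.0 - fraction)

for n in (1, 2, 3):
    print(f"{n} x IMFP: {fraction_within(n * 2.0, 2.0):.3f}")   # 0.632, 0.865, 0.950
```

Tilting the detection angle to 60° halves the effective sampling depth. This is why grazing emission emphasizes the outermost layers.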

Achieving Surface Specificity Through Experimental Design

Beyond the inherent surface sensitivity provided by electron IMFP, certain techniques achieve surface specificity through specialized experimental designs and selection rules:

Grazing Incidence Geometry: Techniques like Reflection-Absorption Infrared Spectroscopy (RAIRS) use grazing incidence angles (typically 80°-88° from the surface normal) to maximize surface sensitivity. In RAIRS, a surface selection rule applies on metal surfaces: only vibrations whose dynamic dipole moment has a component perpendicular to the surface are IR-active, which provides information on molecular orientation [13].

Evanescent Wave Sensing: Attenuated Total Reflectance (ATR) spectroscopy utilizes the evanescent wave that penetrates 0.5-2 μm into a sample in contact with a high-refractive-index crystal, providing surface-sensitive information with minimal sample preparation [13].

Plasmonic Enhancement: Surface-Enhanced Raman Spectroscopy (SERS) achieves extraordinary sensitivity (with enhancement factors reaching 10¹⁰-10¹¹) through localized surface plasmon resonance of metal nanostructures, enabling even single-molecule detection by dramatically amplifying signals from molecules adsorbed on rough metal surfaces [13].

Sputtering Mechanisms: Secondary Ion Mass Spectrometry (SIMS) techniques use primary ion beams to sputter secondary ions from only the top 1-3 monolayers of a surface, providing exceptional surface sensitivity with sampling depths below 1 nm in static mode [14].

The following diagram illustrates the fundamental concept of how surface sensitivity is achieved in electron spectroscopy techniques through the limited escape depth of electrons:

Figure 1: Fundamental principle of surface sensitivity in electron spectroscopy techniques, where the limited escape depth of electrons ensures detected signal originates primarily from surface regions.

Comparative Analysis of Surface Analytical Techniques

Electron Spectroscopy Techniques

X-ray Photoelectron Spectroscopy (XPS) operates by irradiating a sample with X-rays (typically Al Kα at 1486.6 eV or Mg Kα at 1253.6 eV) that eject core electrons from atoms. The kinetic energy of these emitted photoelectrons is measured and related to their binding energy through Einstein's photoelectric equation. XPS provides exceptional surface sensitivity with a sampling depth of 1-10 nm, making it highly effective for determining elemental composition, oxidation states, and chemical environments. The technique requires ultra-high vacuum (UHV) conditions to minimize surface contamination and is widely applied in materials science, catalysis, and semiconductor research. One significant advantage of XPS is its ability to detect all elements except hydrogen and helium, with detection limits typically ranging from 0.1-1 atomic percent [13] [15].
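The photoelectric relation behind XPS is E_B = hν - E_K - φ, where φ is the spectrometer work function. The sketch below applies it in both directions; the 4.5 eV work function is a placeholder assumption (in practice it is fixed by energy-scale calibration), and 284.8 eV is used only as a familiar example peak (the value commonly adopted for adventitious carbon C 1s). It shows that the measured kinetic energy changes with the X-ray source while the derived binding energy does not.

```python
AL_K_ALPHA_EV = 1486.6  # Al Kα photon energy quoted in the text
MG_K_ALPHA_EV = 1253.6  # Mg Kα photon energy quoted in the text


def binding_energy(kinetic_ev: float, photon_ev: float = AL_K_ALPHA_EV,
                   work_function_ev: float = 4.5) -> float:
    """E_B = hν - E_K - φ. The 4.5 eV spectrometer work function is a placeholder;
    in practice it is fixed by calibrating the instrument."""
    return photon_ev - kinetic_ev - work_function_ev


def kinetic_energy(binding_ev: float, photon_ev: float = AL_K_ALPHA_EV,
                   work_function_ev: float = 4.5) -> float:
    """Inverse relation: the kinetic energy at which a given core level appears."""
    return photon_ev - binding_ev - work_function_ev


if __name__ == "__main__":
    c1s = 284.8  # eV, binding energy commonly used as the adventitious-carbon reference
    for name, hv in (("Al Ka", AL_K_ALPHA_EV), ("Mg Ka", MG_K_ALPHA_EV)):
        ke = kinetic_energy(c1s, hv)
        print(f"{name}: KE = {ke:.1f} eV -> BE = {binding_energy(ke, hv):.1f} eV")
```

With these assumptions the same peak appears at 1197.3 eV kinetic energy under Al Kα and 964.3 eV under Mg Kα, while both map back to 284.8 eV binding energy.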

Auger Electron Spectroscopy (AES) employs a focused electron beam to excite core electrons, initiating a process where an outer-shell electron fills the core hole, releasing energy that ejects another outer-shell electron (the Auger electron). The kinetic energy of these Auger electrons is characteristic of specific elements. AES offers excellent spatial resolution at the nanometer scale for surface mapping and is particularly effective for light elements (atomic number Z < 20) due to their higher Auger yield. With a sampling depth similar to XPS (3-10 nm), AES is commonly integrated with scanning electron microscopy (SEM) and finds applications in thin film analysis, corrosion studies, and electronics quality control. Its detection limits also range from 0.1-1 atomic percent [13] [15].

Low-Energy Electron Diffraction (LEED) utilizes low-energy electrons (20-200 eV) to probe surface structure and crystallography. These electrons interact with surface atoms to produce diffraction patterns that reveal surface periodicity and symmetry. LEED requires UHV conditions and provides information about surface reconstruction, adsorbate ordering, atomic positions, and bond lengths. It serves as a valuable complement to other surface techniques like scanning tunneling microscopy (STM) and is particularly useful for studying surface phase transitions and epitaxial growth [13].
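LEED works because electrons in the 20-200 eV range have de Broglie wavelengths comparable to interatomic spacings. A minimal sketch of the non-relativistic relation λ = h / sqrt(2 mₑ E), using CODATA constants, makes this concrete:

```python
import math

H_J_S = 6.62607015e-34          # Planck constant, J s
M_E_KG = 9.1093837015e-31       # electron rest mass, kg
E_CHARGE_C = 1.602176634e-19    # elementary charge, C (converts eV to J)


def electron_wavelength_angstrom(energy_ev: float) -> float:
    """Non-relativistic de Broglie wavelength λ = h / sqrt(2 m_e E), returned in Å."""
    momentum = math.sqrt(2.0 * M_E_KG * energy_ev * E_CHARGE_C)
    return H_J_S / momentum * 1e10


if __name__ == "__main__":
    for energy in (20, 50, 100, 200):
        print(f"{energy:4d} eV -> {electron_wavelength_angstrom(energy):.2f} Å")
```

Across the LEED energy range the wavelength falls from about 2.7 Å at 20 eV to about 0.87 Å at 200 eV, which is on the scale of surface lattice spacings; the relativistic correction is negligible at these energies.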

Mass Spectrometry Techniques

Secondary Ion Mass Spectrometry (SIMS) and its variant Time-of-Flight SIMS (ToF-SIMS) are exceptionally surface-sensitive techniques based on bombarding a sample with a primary ion beam (Cs⁺, O₂⁺, Ar⁺, Ga⁺, or Au⁺) that sputters secondary ions from the top 1-2 nm of the surface [14]. These secondary ions are then mass-analyzed to provide information about surface composition. ToF-SIMS, which uses a pulsed ion beam, operates in static mode where the total ion dose is kept low enough to avoid significant surface damage, making it ideal for analyzing the outermost surface layers. The technique offers outstanding detection limits ranging from ppm to ppb and can detect all elements, including hydrogen, plus molecular species. ToF-SIMS can provide mass spectra, ion images with sub-micron spatial resolution, and depth profiles. However, quantitative analysis remains challenging without extensive calibration, and samples must be vacuum-compatible [13] [14].

The distinction between static SIMS (SSIMS) and dynamic SIMS is crucial for understanding sampling depth. Static SIMS uses low primary ion doses (<10¹³ ions/cm²) to preserve the chemical integrity of the first monolayer during analysis, while dynamic SIMS uses higher ion doses to progressively remove layers for depth profiling. ToF-SIMS excels at providing molecular information from organic and biological surfaces, with applications in contaminant identification, failure analysis, and surface characterization of both conductive and insulating materials [14].
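The static limit can be turned into a practical acquisition budget, since the dose is simply the number of primary ions delivered per unit area, I·t / (z·e·A). The sketch below assumes a hypothetical 1 pA time-averaged current rastered over a hypothetical 500 µm × 500 µm area, and compares the 10¹³ ions/cm² limit with the typical order of magnitude of about 10¹⁵ atoms/cm² in a surface monolayer.

```python
E_CHARGE_C = 1.602176634e-19  # elementary charge, C


def primary_ion_dose(current_a: float, time_s: float, area_cm2: float, charge: int = 1) -> float:
    """Accumulated primary ion dose (ions/cm^2) for a beam of mean current current_a
    rastered uniformly over area_cm2."""
    ions = current_a * time_s / (charge * E_CHARGE_C)
    return ions / area_cm2


def time_to_static_limit(current_a: float, area_cm2: float,
                         limit_ions_cm2: float = 1e13, charge: int = 1) -> float:
    """Acquisition time (s) before the dose reaches the static limit."""
    return limit_ions_cm2 * area_cm2 * charge * E_CHARGE_C / current_a


if __name__ == "__main__":
    current = 1e-12          # A, hypothetical time-averaged pulsed-beam current
    area = 0.05 * 0.05       # cm^2, hypothetical 500 µm x 500 µm raster
    t = time_to_static_limit(current, area)
    print(f"static limit reached after {t:.0f} s ({t / 60:.0f} min)")
    # Against ~1e15 surface atoms/cm^2, 1e13 ions/cm^2 corresponds to about 1% of surface sites
    print(f"ions per surface atom: {1e13 / 1e15:.0%}")
```

Under these assumptions the static limit is reached after roughly 4000 s; halving the raster side length would cut that time by a factor of four, which is why high-resolution imaging of small areas quickly approaches the static limit.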

Vibrational Spectroscopy Techniques

Surface-Enhanced Raman Spectroscopy (SERS) dramatically amplifies Raman scattering signals from molecules adsorbed on nanostructured metal surfaces through electromagnetic enhancement (localized surface plasmon resonance) and chemical enhancement (charge transfer processes). With enhancement factors reaching 10¹⁰-10¹¹, SERS enables single-molecule detection and provides high sensitivity and molecular specificity for surface analysis. Common substrates include silver, gold, and copper nanoparticles, with applications in biosensing, trace analysis, and in situ monitoring of surface reactions [13].
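Enhancement factors are usually reported per molecule, comparing SERS and normal Raman intensities normalized by the number of molecules probed in each measurement. A minimal sketch of that commonly used substrate definition follows; all input numbers are hypothetical, and in practice the result depends strongly on how the molecule counts N are estimated.

```python
import math


def sers_enhancement_factor(i_sers: float, n_sers: float,
                            i_normal: float, n_normal: float) -> float:
    """EF = (I_SERS / N_SERS) / (I_normal / N_normal): per-molecule SERS intensity
    divided by per-molecule normal Raman intensity under the same acquisition conditions."""
    if min(i_sers, n_sers, i_normal, n_normal) <= 0:
        raise ValueError("intensities and molecule counts must be positive")
    return (i_sers / n_sers) / (i_normal / n_normal)


if __name__ == "__main__":
    # Hypothetical counts: fewer molecules on the SERS substrate, but a stronger signal
    ef = sers_enhancement_factor(i_sers=5.0e4, n_sers=1.0e6, i_normal=2.0e3, n_normal=1.0e13)
    print(f"EF = {ef:.1e} (log10 EF = {math.log10(ef):.1f})")
```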

Attenuated Total Reflectance (ATR) Spectroscopy is a non-destructive sampling technique where IR radiation undergoes total internal reflection in a high-refractive-index crystal (diamond, germanium, or zinc selenide). The evanescent wave penetrating the sample (typically 0.5-2 μm) provides surface-sensitive information with minimal sample preparation. ATR is suitable for analyzing liquids, solids, and thin films, with applications in polymer analysis, quality control, and environmental monitoring [13].
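The penetration depth of the evanescent wave can be estimated with Harrick's expression d_p = λ / (2π n₁ sqrt(sin²θ - (n₂/n₁)²)), where n₁ and n₂ are the refractive indices of the crystal and sample. The sketch below assumes a 45° angle of incidence, a sample index of 1.5 (typical of organic materials), and approximate mid-IR crystal indices of 2.4 for diamond and 4.0 for germanium.

```python
import math


def penetration_depth_um(wavenumber_cm1: float, n_crystal: float,
                         n_sample: float = 1.5, angle_deg: float = 45.0) -> float:
    """Harrick's expression for the depth at which the evanescent field amplitude
    decays to 1/e: d_p = λ / (2π n1 sqrt(sin²θ - (n2/n1)²))."""
    wavelength_um = 1e4 / wavenumber_cm1   # vacuum wavelength from wavenumber
    theta = math.radians(angle_deg)
    arg = math.sin(theta) ** 2 - (n_sample / n_crystal) ** 2
    if arg <= 0:
        raise ValueError("incidence angle is below the critical angle")
    return wavelength_um / (2 * math.pi * n_crystal * math.sqrt(arg))


if __name__ == "__main__":
    for crystal, n in (("diamond", 2.4), ("germanium", 4.0)):
        depths = [penetration_depth_um(w, n) for w in (3000, 1000)]
        print(f"{crystal}: {depths[0]:.2f} um at 3000 cm-1, {depths[1]:.2f} um at 1000 cm-1")
```

Two design points follow from the formula: the penetration depth scales with wavelength, so low-wavenumber bands probe deeper than C-H stretches, and the higher-index germanium crystal gives a roughly threefold shallower probe than diamond at the same angle.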

Reflection-Absorption Infrared Spectroscopy (RAIRS) specializes in analyzing thin films and adsorbates on reflective metal surfaces using grazing incidence geometry (80°-88° from the surface normal). The technique exploits the surface selection rule, whereby only vibrations whose dynamic dipole moment has a component perpendicular to the surface are IR-active, providing valuable information about molecular orientation. RAIRS is often combined with UHV systems for in situ surface studies and finds applications in catalysis research, self-assembled monolayer characterization, and corrosion studies [13].
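One common way to turn the selection rule into an orientation estimate is to note that absorbance scales with cos²θ, where θ is the angle between the transition dipole and the surface normal. For a randomly oriented film the average of cos²θ is 1/3, so the ratio of the observed band absorbance to that of an isotropic film of equal coverage is 3cos²θ. The sketch below inverts that relation. It assumes a single well-defined tilt, equal surface coverage, and an isotropic reference spectrum measured or calculated for the same film; the function name is illustrative.

```python
import math


def dipole_tilt_deg(a_observed: float, a_isotropic: float) -> float:
    """Tilt of a transition dipole from the surface normal, from the ratio of its
    RAIRS absorbance to that of an isotropic film of equal coverage (ratio = 3 cos²θ)."""
    ratio = a_observed / a_isotropic
    if not 0.0 <= ratio <= 3.0:
        raise ValueError("ratio must lie between 0 (dipole parallel) and 3 (dipole along normal)")
    return math.degrees(math.acos(math.sqrt(ratio / 3.0)))


if __name__ == "__main__":
    for ratio in (0.0, 0.5, 1.0, 2.0, 3.0):
        print(f"A_obs/A_iso = {ratio:.1f} -> tilt {dipole_tilt_deg(ratio, 1.0):.1f} deg")
```

A ratio of 1 returns the "magic angle" of 54.7°, which is also what a disordered film would give, so a ratio near 1 alone does not prove an ordered tilted structure.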

Table 1: Comparison of Surface Analysis Techniques - Sampling Depth and Detection Characteristics

| Technique | Sampling Depth | Detection Limits | Lateral Resolution | Primary Information Obtained |
|---|---|---|---|---|
| XPS | 1-10 nm [13] [15] | 0.1-1 at.% [15] | 10 µm - 600 µm [15] | Elemental composition, chemical states, oxidation states [13] |
| AES | 3-10 nm [15] | 0.1-1 at.% [15] | 10 nm - 1 µm [15] | Elemental composition, surface mapping [13] |
| LEED | 0.5-2 nm [13] | N/A | ~1 mm [13] | Surface structure, crystallography, reconstruction [13] |
| SIMS/ToF-SIMS | 0.5-3 nm (static) [14] [15] | ppm-ppb [15] | 0.2-1 µm [14] [15] | Elemental and molecular composition, surface contaminants [14] |
| SERS | Single monolayer [13] | Single molecule [13] | Diffraction-limited [13] | Molecular vibrations, chemical bonding [13] |
| ATR | 0.5-2 µm [13] | ~1% [13] | ~1 mm [13] | Molecular structure, functional groups [13] |
| RAIRS | Single monolayer [13] | ~1% [13] | ~1 mm [13] | Molecular orientation, adsorbate-substrate interactions [13] |

Table 2: Vacuum Requirements and Elemental Coverage of Surface Techniques

| Technique | Vacuum Requirements | Elements/Species Detected | Quantitative Capability | Maximum Profiling Depth |
|---|---|---|---|---|
| XPS | Ultra-high vacuum [13] | Li - U (all except H, He) [15] | Semi-quantitative [15] | ~1 µm [15] |
| AES | Ultra-high vacuum [13] | All elements except H, He [13] | Semi-quantitative [15] | ~1 µm [15] |
| LEED | Ultra-high vacuum [13] | N/A (structural technique) | No | N/A |
| SIMS/ToF-SIMS | High vacuum [14] | Full periodic table + molecular species [14] [15] | Qualitative (ToF-SIMS) [15] | 10 µm (SIMS), 500 nm (ToF-SIMS) [15] |
| SERS | Ambient or controlled atmosphere [13] | Molecular vibrations | Semi-quantitative with standards | Single monolayer |
| ATR | Ambient conditions [13] | Molecular vibrations | Semi-quantitative | 0.5-2 µm |
| RAIRS | UHV to ambient [13] | Molecular vibrations | Semi-quantitative | Single monolayer |

Experimental Methodologies and Protocols

Sample Preparation and Handling Protocols

Proper sample preparation is critical for obtaining reliable surface analysis data, particularly given the extreme sensitivity of surface techniques to contamination. The following protocols should be implemented:

Clean Handling Procedures: Samples must never be touched with bare hands on the surface to be analyzed, as this transfers salts and oils that form thick contaminant layers. Clean, solvent-rinsed tweezers should be used, contacting only non-analysis regions (e.g., sample edges) [12].

Contamination Control: Air exposure should be minimized as it deposits hydrocarbon films on surfaces—even brief exposure of a clean gold surface to air results in hydrocarbon contamination. Poly(dimethyl siloxane) (PDMS) is particularly problematic for ToF-SIMS analysis and can be transferred from air, contaminated holders, or manufacturing processes [12].

Solvent Selection: Solvent rinsing, even for cleaning purposes, can deposit contaminants or alter surface composition. Rinsing with tap water typically deposits cations (Na⁺, Ca²⁺), while solvent interactions can cause surface reorganization in multi-component systems where the lowest surface energy component becomes enriched at the surface [12].

Storage and Shipping: Appropriate containers must be selected to prevent contamination; tissue culture polystyrene dishes are generally suitable. The surfaces of storage containers should be analyzed beforehand to ensure they are contamination-free [12].

Ultra-High Vacuum (UHV) Considerations

For techniques requiring UHV conditions (XPS, AES, LEED, SIMS), special considerations apply for biological and organic samples:

Surface Rearrangement: The surface chemistry of polymers with hydrophilic and hydrophobic components can reorganize when transferred from aqueous environments to UHV, with surfaces potentially transitioning from hydrophilic enrichment in aqueous conditions to hydrophobic enrichment in UHV [12].

Structural Preservation: Biological molecules may undergo structural changes in UHV; proteins can denature and unfold when removed from their native aqueous environment. The extent of these changes depends on material properties including energetics, mobility, and structural rigidity [12].

Alternative Approaches: When UHV compatibility is problematic, non-vacuum techniques like SERS, ATR, or RAIRS may provide suitable alternatives for surface analysis under ambient or controlled conditions [13].

The following workflow diagram illustrates the decision process for selecting appropriate surface analysis techniques based on research objectives and sample properties:

Figure 2: Decision workflow for selecting appropriate surface analysis techniques based on research objectives, sample properties, and required information depth.
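The full workflow of Figure 2 is not reproduced here, but the selection rules stated in this section can be sketched as a small decision function. This is a hypothetical simplification: it encodes only the rules given in the text (UHV-incompatible samples go to non-vacuum methods; otherwise start with XPS, then add ToF-SIMS for molecular information or LEED for structure). The `Sample` fields are illustrative names, and the order of the non-vacuum alternatives is arbitrary.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    uhv_compatible: bool          # can the surface survive vacuum without rearranging or denaturing?
    need_molecular: bool = False  # is molecular (not only elemental) information required?
    need_structure: bool = False  # is surface crystallography required (ordered samples)?


def suggest_sequence(sample: Sample) -> list[str]:
    """Return an ordered list of candidate techniques following the rules in the text."""
    if not sample.uhv_compatible:
        # Non-vacuum alternatives; RAIRS additionally needs a reflective metal substrate
        return ["ATR", "SERS", "RAIRS"]
    plan = ["XPS"]  # elemental composition, chemical states and contaminant screening first
    if sample.need_molecular:
        plan.append("ToF-SIMS")
    if sample.need_structure:
        plan.append("LEED")
    return plan


if __name__ == "__main__":
    print(suggest_sequence(Sample(uhv_compatible=True, need_molecular=True)))
    print(suggest_sequence(Sample(uhv_compatible=False)))
```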

Multi-Technique Approach and Data Correlation

Given that no single technique provides complete surface characterization, a multi-technique approach is essential for comprehensive surface analysis:

Complementary Information: Different techniques provide different types of information from different sampling depths. XPS excels at quantifying elemental surface composition and chemical states, while ToF-SIMS offers superior sensitivity for organic and molecular species, and LEED provides structural information [12].

Consistency Validation: Results from multiple techniques must provide consistent information about the sample, though identical experimental values shouldn't necessarily be expected when sampling depths differ. For example, measuring C/O atomic ratios with techniques having different sampling depths (e.g., 2 nm vs. 10 nm) from a sample with a composition gradient will yield different values that, when properly interpreted, provide consistent information about the gradient [12].

Technique Sequencing: Initial analysis typically begins with XPS to determine surface elemental composition and identify contaminants, followed by more specialized techniques like ToF-SIMS for molecular information or LEED for structural characterization, depending on initial findings [12].
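The consistency argument about C/O ratios can be made concrete by weighting a composition profile with the exponential attenuation law. The sketch below assumes a hypothetical oxygen gradient (10 at.% at the surface rising to 30 at.% in the bulk), that only carbon and oxygen are detected, and that each sampling depth equals 3λ, using the 2 nm and 10 nm values from the example above.

```python
import math


def apparent_fraction(profile, imfp_nm, z_max_nm=60.0, steps=6000):
    """Signal-weighted average of a depth profile x(z), with weights exp(-z/λ)
    (trapezoidal integration; z_max must be many IMFPs deep)."""
    dz = z_max_nm / steps
    num = den = 0.0
    for i in range(steps + 1):
        z = i * dz
        w = math.exp(-z / imfp_nm) * (0.5 if i in (0, steps) else 1.0)
        num += profile(z) * w
        den += w
    return num / den


def oxygen_fraction(z_nm):
    """Hypothetical gradient: 10 at.% O at the surface rising to 30 at.% in the bulk."""
    return 0.10 + 0.20 * (1.0 - math.exp(-z_nm / 2.0))


def apparent_c_to_o(sampling_depth_nm):
    imfp = sampling_depth_nm / 3.0           # sampling depth taken as 3λ
    x_o = apparent_fraction(oxygen_fraction, imfp)
    return (1.0 - x_o) / x_o                 # assumes only C and O are detected


if __name__ == "__main__":
    for d in (2.0, 10.0):
        print(f"sampling depth {d:4.1f} nm: apparent C/O = {apparent_c_to_o(d):.2f}")
```

The shallower measurement reports a C/O ratio of about 5.7 and the deeper one about 3.4. The two numbers differ, yet both follow from the same gradient, which is exactly the kind of agreement the consistency check looks for.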

The Scientist's Toolkit: Essential Reagents and Materials

Table 3: Essential Research Reagents and Materials for Surface Analysis

| Item | Function/Application | Technical Considerations |
|---|---|---|
| ATR Crystals (Diamond, Germanium, ZnSe) | Enable evanescent wave sampling in ATR spectroscopy | Different refractive indices and chemical compatibilities; diamond is durable but expensive, Ge gives the shallowest penetration depth (highest surface sensitivity) [13] |
| SERS Substrates (Ag, Au, Cu nanoparticles) | Enhance Raman signals via plasmonic resonance | Silver offers highest enhancement but can oxidize; gold provides better chemical stability [13] |
| Primary Ion Sources (Cs⁺, O₂⁺, Ar⁺, Ga⁺, Au⁺) | Sputter surfaces in SIMS analysis and AES depth profiling | Different ions yield varying secondary ion yields; cluster ions (e.g., Arₙ⁺, C₆₀⁺) enable organic depth profiling [14] [15] |
| Charge Compensation Flood Guns | Neutralize surface charging on insulating samples | Essential for analyzing non-conductive samples with electron or ion beams in XPS, AES, and SIMS [16] |
| UHV-Compatible Sample Holders | Secure samples during analysis in vacuum | Must be constructed of materials with low vapor pressure to maintain vacuum integrity [12] |
| Reference Standards | Quantification and instrument calibration | Certified reference materials with known surface composition essential for quantitative analysis, particularly in SIMS [14] [15] |
| Cryogenic Sample Stages | Preserve volatile compounds and biological structure | Enable analysis of semi-volatile materials in vacuum by reducing vapor pressure [14] |
| Sputter Ion Guns (Ar⁺, C₆₀⁺) | Depth profiling and surface cleaning | Used in conjunction with XPS and AES for layer-by-layer analysis; cluster ions preserve molecular information [15] |

Advanced Applications and Emerging Directions

Depth Profiling Methodologies

Depth profiling extends surface analysis into the third dimension, providing crucial information about layer structures, diffusion processes, and interfacial phenomena. The primary methodologies include:

Sputter-Based Depth Profiling: Techniques like XPS, AES, and SIMS combine surface analysis with sequential material removal using ion sputtering (typically Ar⁺, Cs⁺, or O₂⁺ ions). XPS and AES provide semi-quantitative depth profiles with approximately 1 µm maximum profiling depth, while SIMS offers greater depth resolution and can profile up to 10 µm [15]. Recent advances in cluster ion beams (e.g., C₆₀⁺, Arₙ⁺) have significantly improved the ability to profile organic materials while maintaining molecular structural information [14].

Non-Destructive Depth Profiling: Rutherford Backscattering Spectrometry (RBS) and Time-of-Flight Elastic Recoil Detection Analysis (ToF-ERDA) provide depth information without sputtering by measuring the energy loss of ions backscattered from the sample (RBS) or of target atoms recoiled out of it (ToF-ERDA). RBS offers excellent sensitivity for heavy elements (ppm range) with a 5-15 nm probing depth, while ToF-ERDA can detect all elements, including hydrogen and its isotopes, with similar depth resolution [15].

Glow Discharge Techniques: Glow Discharge Optical Emission Spectroscopy (GD-OES) combines sputtering and excitation in a single plasma step, enabling rapid depth profiling (µm/min) without requiring UHV conditions. GD-OES provides quantitative elemental composition with 3 nm depth resolution and can profile up to 150 µm deep, making it particularly valuable for thick coating analysis [15] [16].
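Raw sputter profiles are recorded as intensity versus time, so two conversions are usually needed: time to depth and intensity to concentration. The sketch below assumes a constant sputter rate calibrated from a post-profile crater depth, and, for SIMS, a relative sensitivity factor (RSF) obtained from a reference standard in the same matrix. All numbers are hypothetical, and a constant rate is a poor assumption across interfaces between materials that sputter at different rates.

```python
def time_to_depth_nm(times_s, crater_depth_nm, total_time_s):
    """Convert sputter time to depth assuming a constant sputter rate, calibrated
    from a crater depth measured after the profile (e.g. by profilometry)."""
    rate = crater_depth_nm / total_time_s  # nm/s
    return [t * rate for t in times_s]


def rsf_concentration(impurity_counts, matrix_counts, rsf_atoms_cm3):
    """SIMS quantification with a relative sensitivity factor from a reference
    standard: C = RSF * I_impurity / I_matrix (atoms/cm^3)."""
    return [rsf_atoms_cm3 * i / m for i, m in zip(impurity_counts, matrix_counts)]


if __name__ == "__main__":
    times = [0, 100, 200, 300, 400]            # s
    impurity = [10, 500, 2000, 500, 10]        # counts/s, hypothetical
    matrix = [1e6] * 5                         # counts/s, hypothetical
    depths = time_to_depth_nm(times, crater_depth_nm=800, total_time_s=400)
    conc = rsf_concentration(impurity, matrix, rsf_atoms_cm3=1e22)
    for z, c in zip(depths, conc):
        print(f"{z:6.0f} nm  {c:.1e} atoms/cm^3")
```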

Biological and Pharmaceutical Applications

Surface analysis techniques face particular challenges when applied to biological and pharmaceutical systems, where samples are often complex, fragile, and require aqueous environments:

Biomaterial Characterization: XPS and ToF-SIMS are extensively used to characterize biomaterial surfaces, quantifying elemental composition and detecting molecular species at interfaces. These techniques help understand protein adsorption, cell attachment, and tissue integration mechanisms [12].

Drug Delivery Systems: Surface analysis provides critical information about drug distribution in carrier systems, surface functionalization of nanoparticles, and coating integrity of controlled-release formulations. ToF-SIMS imaging excels at mapping the lateral distribution of active pharmaceutical ingredients on particle surfaces [14].

Medical Device Analysis: Understanding surface chemistry of implants, catheters, and diagnostic devices is essential for predicting biological responses. Multi-technique approaches combining XPS, ToF-SIMS, and ATR provide comprehensive characterization of surface modifications, contaminant identification, and stability assessment [12].

The continuing development of surface analysis techniques focuses on improving sensitivity, spatial resolution, and the ability to characterize complex biological systems under native conditions. The integration of multiple techniques remains essential for comprehensive surface characterization, with correlative approaches that combine information from different methods providing the most complete understanding of surface properties and behaviors.

In materials science and engineering, the surface is defined as the outermost layer of a solid material that interacts with its environment, typically the interface between a solid and a fluid (liquid or gas) [17] [18]. This region, often just three to five atomic layers thick (1-2 nm), plays a disproportionately critical role in determining material performance and functionality [19]. While bulk properties define general material categories, surface properties ultimately dictate how materials behave in practical applications, influencing characteristics as diverse as corrosion resistance, adhesion, catalytic activity, and biocompatibility [19] [17].

The significance of surfaces becomes particularly pronounced as material dimensions decrease. In nanomaterials, where the surface-to-volume ratio increases dramatically, surface properties can dominate overall material behavior [17]. This fundamental understanding has driven the development of specialized surface chemical analysis techniques that can probe the top few nanometers of materials, providing crucial information for both research and quality control across numerous industries [19] [17].
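The growing weight of the surface at small dimensions can be estimated with simple geometry. The sketch below treats a particle as a sphere and takes the outer 1 nm (the lower end of the 1-2 nm surface region quoted above) as "surface"; using the shell's volume fraction as a proxy for the fraction of surface atoms is a simplifying assumption.

```python
def surface_atom_fraction(diameter_nm: float, shell_nm: float = 1.0) -> float:
    """Volume fraction of a sphere lying within shell_nm of its surface,
    used as a proxy for the fraction of atoms in the surface region."""
    r = diameter_nm / 2.0
    if shell_nm >= r:
        return 1.0  # the whole particle lies within the surface region
    return 1.0 - ((r - shell_nm) / r) ** 3


if __name__ == "__main__":
    for d in (1000, 100, 10, 4):
        print(f"{d:5d} nm particle: {surface_atom_fraction(d):.1%} in the outer 1 nm")
```

Under this approximation the surface share grows from about 0.6% for a 1 µm particle to about 49% at 10 nm and 87.5% at 4 nm, which illustrates why surface properties can dominate nanomaterial behavior.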

Surface-Induced Phenomena and Mechanisms

Corrosion: Surface Degradation Mechanisms

Corrosion represents one of the most economically significant surface-mediated phenomena, involving electrochemical reactions at the material-environment interface. In fluorine atmospheres, metallic materials undergo fluorination reactions that initially form protective fluoride scales on the surface [20]. However, under extreme conditions, these scales become unstable, leading to breakdown and exfoliation that exposes fresh substrate to further attack [20]. This process demonstrates the crucial protective function of engineered surfaces.

Recent research on orthodontic brackets illustrates how surface composition affects corrosion behavior. When exposed to simulated gastric acid (pH 1.5-3.0), metal and self-ligating brackets released significantly more nickel (Ni) and chromium (Cr) ions compared to ceramic brackets [21]. This ion release peaked at 24 hours before decreasing after one month, demonstrating how surface passivation can evolve over time [21]. The accompanying increase in surface roughness further evidenced the degradation processes occurring at the interface [21].

Table 1: Ion Release and Surface Roughness of Different Brackets in Acidic Conditions (pH 1.5)

| Bracket Type | Maximum Ni Release (24h) | Maximum Cr Release (24h) | Surface Roughness (Ra) | Time of Maximum Roughness |
|---|---|---|---|---|
| Metal (M) | High | Moderate | Highest | 24 hours |
| Self-Ligating (SL) | High | Highest | High | 24 hours |
| Ceramic (C) | Lowest | Lowest | Lowest | 24 hours |

Adhesion: Interfacial Bonding Forces

Adhesion science explores the forces that bind different materials at their interfaces. At the nanoscale, these interactions display remarkable complexity. Recent investigations into shale organic matter revealed that adhesion properties correlate positively with surface electrical characteristics [22]. Using atomic force microscopy (AFM) and Kelvin probe force microscopy (KPFM), researchers found that the surface potential of organic matter shifts from negative in dry states to positive under water-wet and water-ScCO₂ conditions, directly influencing adhesive behavior [22].

This relationship between surface chemistry and adhesion has profound implications for industrial processes and product development. For example, the inhomogeneous distribution of functional groups creates chemical heterogeneity on surfaces, leading to "patchy wetting" and varied surface energy distributions that control adhesion performance [22]. Understanding these nanoscale interactions enables the design of surfaces with tailored adhesive properties for applications ranging from medical devices to aerospace components.

Catalysis: Surface-Mediated Reaction Acceleration

Heterogeneous catalysis relies entirely on surface phenomena, where reactions occur at the interface between solid catalysts and fluid reactants. The specific surface area (SSA) of a catalyst directly determines the number of accessible active sites, profoundly influencing reaction rates [23]. This principle was elegantly demonstrated using cobalt spinel (Co₃O₄) catalysts with varying SSA for hydrogen peroxide decomposition [23].

Catalyst performance depends critically on both the number and nature of active sites - specific surface atoms or groups where catalytic transformations occur [23]. As catalyst dimensions decrease, the proportion of surface atoms increases, enhancing potential activity. This explains the intense interest in nanocatalysts and two-dimensional materials like graphene, which represent the ultimate surface-dominated systems [23] [17].

Table 2: Catalyst Specific Surface Area vs. Reaction Performance

| Calcination Temperature (°C) | Specific Surface Area (m²/g) | Catalytic Activity | Reaction Rate Constant |
|---|---|---|---|
| 300 | Highest | Highest | Highest |
| 400 | High | High | High |
| 500 | Moderate | Moderate | Moderate |
| 600 | Lowest | Lowest | Lowest |
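The specific surface areas in Table 2 are given only qualitatively. Values of this kind are commonly obtained from nitrogen adsorption isotherms using the BET method, although the source does not state which model was applied. The sketch below fits the linearized BET equation and converts the monolayer volume to an area, assuming the conventional 0.162 nm² cross-section of an adsorbed N₂ molecule. The isotherm is synthetic, generated from the BET equation itself with v_m = 10 cm³(STP)/g and c = 100, so the fit should recover those values.

```python
N_A = 6.02214076e23              # Avogadro constant, 1/mol
SIGMA_N2_M2 = 0.162e-18          # cross-sectional area of an adsorbed N2 molecule, m^2
MOLAR_VOLUME_STP_CM3 = 22414.0   # cm^3 (STP) per mol of ideal gas


def _linear_fit(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def bet_surface_area(rel_pressure, adsorbed_cm3_per_g):
    """Return (SSA in m^2/g, monolayer volume v_m, BET constant c) from the
    linearised BET equation: x / (v (1 - x)) = 1/(v_m c) + (c - 1)/(v_m c) * x."""
    y = [x / (v * (1.0 - x)) for x, v in zip(rel_pressure, adsorbed_cm3_per_g)]
    slope, intercept = _linear_fit(rel_pressure, y)
    v_m = 1.0 / (slope + intercept)
    c = slope / intercept + 1.0
    ssa = v_m / MOLAR_VOLUME_STP_CM3 * N_A * SIGMA_N2_M2
    return ssa, v_m, c


if __name__ == "__main__":
    # Synthetic isotherm from the BET equation with v_m = 10 cm^3/g and c = 100
    xs = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
    vs = [10 * 100 * x / ((1 - x) * (1 + 99 * x)) for x in xs]
    ssa, v_m, c = bet_surface_area(xs, vs)
    print(f"SSA = {ssa:.1f} m^2/g (v_m = {v_m:.2f} cm^3/g, c = {c:.0f})")
```

The fit returns about 43.5 m²/g. With real data the relative-pressure window (commonly around 0.05-0.30) has to be checked, because the linear BET form holds only over a limited range.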

Biocompatibility: The Biological-Surface Interface

In medical applications, surface properties determine biological responses to implants and devices. The biocompatibility of materials depends critically on surface characteristics that mediate interactions with biological systems [24] [21]. For orthodontic brackets, surface composition affects ion release that can trigger allergic reactions or tissue inflammation [21]. Similarly, titanium alloys used in orthopedic and dental applications require surface modifications to enhance biocompatibility while maintaining desirable bulk properties [18].

The medical device industry addresses these challenges through rigorous biological safety evaluations that assess surface-mediated interactions [24]. These evaluations examine how device materials interact with biological systems through their surfaces, analyzing extractables and leachables that migrate from the material surface into surrounding tissues [24]. Understanding these surface phenomena enables the development of safer, more effective medical devices with optimized biological responses.

Advanced Surface Analysis Techniques

Modern surface science relies on sophisticated analytical techniques that probe the top few nanometers of materials. The most widely used methods include:

X-ray Photoelectron Spectroscopy (XPS/ESCA): Measures the kinetic energy of photoelectrons ejected by X-ray irradiation, providing quantitative, surface-sensitive chemical state information with a sampling depth of approximately 10 nm [19] [17].

Auger Electron Spectroscopy (AES): Uses focused electron beams to excite electron transitions, analyzing resulting Auger electrons to determine elemental composition of the top few atomic layers [19].

Secondary Ion Mass Spectrometry (SIMS): Employs focused ion beams to sputter atoms and molecules from the outermost atomic layer, then analyzes them by mass spectrometry for extremely surface-sensitive characterization [19].

Atomic Force Microscopy (AFM) and Kelvin Probe Force Microscopy (KPFM): Scanning probe techniques that measure surface morphology, adhesion forces, and surface potential at nanoscale resolution [22].

These techniques often complement each other, with combinations like XPS and SIMS providing comprehensive surface elemental mapping and chemical state quantification [19]. For thin-film characterization, these surface analysis methods can be combined with sputter depth profiling to determine composition as a function of depth from the original surface [17].

Diagram 1: Surface analysis techniques and their applications in studying material interfaces. Techniques provide complementary information about surface properties that determine performance in key application areas.

Experimental Approaches and Methodologies

Nanoscale Adhesion and Surface Potential Measurements

Understanding adhesion at the nanoscale requires sophisticated measurement techniques. Recent research on shale organic matter exemplifies a comprehensive approach using AFM and KPFM to correlate adhesion properties with surface electrical characteristics [22]:

Sample Preparation: Shale samples from the Longmaxi Formation with high organic content (2.61 wt% TOC) were prepared. The mineral composition was characterized using X-ray diffraction [22].

Experimental Conditions:

- Dry state measurements

- Water-wet conditions

- Water-ScCO₂ treatments (3h and 12h exposures)

Measurement Protocol:

- AFM viscoelastic mapping to measure adhesion forces through force-displacement curves

- KPFM measurements to determine corresponding surface potential changes

- Spatial correlation analysis between adhesion and surface potential

- Statistical analysis of multiple measurement areas to assess heterogeneity

This methodology revealed that adhesion properties exhibit a positive linear correlation with surface electrical properties, with both parameters showing significant spatial heterogeneity at the nanoscale due to uneven distribution of oxygen-containing functional groups [22].
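A minimal sketch of the two calculations behind such a correlation: converting cantilever deflection at pull-off into an adhesion force with Hooke's law (F = k·δ), then computing the Pearson coefficient and least-squares slope against surface potential. The spring constant, deflections and KPFM potentials are all hypothetical values, not data from the cited study.

```python
import math


def pull_off_force_nn(spring_constant_n_per_m: float, deflection_nm: float) -> float:
    """Hooke's law F = k * δ; with k in N/m and δ in nm the result is in nN."""
    return spring_constant_n_per_m * deflection_nm


def pearson_and_slope(x, y):
    """Pearson correlation coefficient and least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy), sxy / sxx


if __name__ == "__main__":
    k = 0.4                                    # N/m, hypothetical calibrated spring constant
    deflections = [20, 25, 30, 38, 45]         # nm at pull-off, hypothetical
    potentials = [-40, -20, 0, 30, 60]         # mV from KPFM at the same spots, hypothetical
    forces = [pull_off_force_nn(k, d) for d in deflections]
    r, slope = pearson_and_slope(potentials, forces)
    print(f"r = {r:.3f}, slope = {slope:.3f} nN/mV")
```

In a real map the same calculation would run over co-registered pixels from the AFM and KPFM images, and spatial heterogeneity would show up as scatter around the fitted line.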

Corrosion Monitoring in Aggressive Environments

Evaluating material performance in corrosive environments requires careful experimental design. A study on orthodontic brackets demonstrates a systematic approach to corrosion assessment [21]:

Test Materials:

- Metal brackets (M)

- Self-ligating brackets (SL)

- Ceramic brackets (C)

Solution Preparation:

- Artificial saliva (pH 7.0): 7.69 g/L dipotassium phosphate, 2.46 g/L dipotassium hydrogen phosphate, 5.3 g/L sodium chloride, 9.3 g/L potassium chloride, pH adjusted with lactic acid [21]

- Simulated gastric acid (pH 1.5 and 3.0): 2.0 g NaCl, 3.2 g pepsin, 7.0 mL HCl, diluted with water to required pH [21]

Exposure Protocol:

- Incubation at 37°C for 30 min, 24 h, and 1 month

- Sample size: n = 22 for each time interval

- Airtight glass tubes with 1.5 mL solution volume

Analysis Methods:

- ICP-MS for quantitative measurement of Ni and Cr ion release

- Optical profilometry for surface roughness measurements (Zeiss Axio CSM700)

- SEM for surface morphology examination at 1000× magnification

This comprehensive approach revealed that ion release peaked at 24 hours before decreasing, while surface roughness showed parallel changes, indicating complex time-dependent corrosion and passivation behavior [21].
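Two of the reported quantities reduce to short calculations: the arithmetic mean roughness Ra of a height profile, and the mass of ions released into the 1.5 mL immersion volume from an ICP-MS concentration. The sketch below is simplified: it applies no form removal or cut-off filtering before computing Ra, and the profile heights and the 12 µg/L nickel reading are hypothetical.

```python
def arithmetic_mean_roughness(heights_um):
    """Ra = mean absolute deviation of profile heights from their mean line.
    Simplified: no form removal or cut-off filtering is applied."""
    mean = sum(heights_um) / len(heights_um)
    return sum(abs(h - mean) for h in heights_um) / len(heights_um)


def released_mass_ng(concentration_ug_per_l: float, volume_ml: float = 1.5) -> float:
    """Mass of ions in the immersion solution: µg/L multiplied by mL gives ng."""
    return concentration_ug_per_l * volume_ml


if __name__ == "__main__":
    profile = [0.21, -0.12, 0.35, -0.40, 0.05, -0.09]  # µm, hypothetical line profile
    print(f"Ra = {arithmetic_mean_roughness(profile):.3f} um")
    print(f"Ni released: {released_mass_ng(12.0):.1f} ng")  # 12 µg/L is a hypothetical reading
```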

Catalyst Specific Surface Area Demonstration

An educational demonstration illustrates the critical relationship between catalyst specific surface area and reaction rate [23]:

Catalyst Preparation:

- Synthesis of cobalt carbonate precursor from cobalt nitrate solution

- Precipitation using sodium carbonate solution until pH 9

- Washing and drying at 60°C for at least 2 hours

- Calcination at 300°C, 400°C, 500°C, and 600°C for 2 hours to produce Co₃O₄ with varying SSA

Demonstration Protocol:

- Preparation of detergent solution (10 mL detergent per 100 mL water)

- Addition of 10 mL detergent solution to four 250 mL graduated cylinders

- Introduction of 0.25 g Co₃O₄ calcined at different temperatures to each cylinder

- Simultaneous addition of 5 mL of 10% w/w hydrogen peroxide to all cylinders

- Video recording of foam formation for quantitative analysis

Measurement and Analysis:

- Video tracking software (CMA Coach 6) to measure foam volume versus time

- Specific surface area measurement by nitrogen adsorption at liquid nitrogen temperature

- Kinetic analysis to determine reaction rate constants

This experiment visually demonstrates that catalysts with higher SSA produce faster reaction rates, providing quantitative data that can be used for comparative kinetic analysis [23].
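The kinetic analysis step is not specified in detail. One simple option, sketched here under stated assumptions, treats foam volume as proportional to the oxygen evolved and the decomposition as first order in H₂O₂, so that V(t) = V∞(1 - e^(-kt)), with V∞ the final plateau volume. The rate constant is then the slope of ln(1 - V/V∞) against t. The data below are synthetic, generated with k = 0.05 s⁻¹.

```python
import math


def first_order_rate_constant(times_s, volumes_ml, v_final_ml):
    """Least-squares k (1/s) from V(t) = V_final * (1 - exp(-k t)),
    fitted as ln(1 - V/V_final) = -k t through the origin."""
    ys = []
    for v in volumes_ml:
        if not 0 <= v < v_final_ml:
            raise ValueError("each volume must be below the final (plateau) volume")
        ys.append(math.log(1.0 - v / v_final_ml))
    return -sum(t * y for t, y in zip(times_s, ys)) / sum(t * t for t in times_s)


if __name__ == "__main__":
    times = [5, 10, 20, 30, 40]                                  # s
    volumes = [200 * (1 - math.exp(-0.05 * t)) for t in times]   # synthetic foam volumes, mL
    k = first_order_rate_constant(times, volumes, v_final_ml=200)
    print(f"k = {k:.3f} 1/s, half-life = {math.log(2) / k:.1f} s")
```

Applying the same fit to the four cylinders would give one k per calcination temperature, which can then be compared with the measured specific surface areas.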

Table 3: Research Reagent Solutions for Surface Science Experiments

| Reagent/Material | Function/Application | Technical Specifications | Key Experimental Considerations |
|---|---|---|---|
| Cobalt Spinel (Co₃O₄) | Heterogeneous Catalyst | Variable specific surface area (controlled by calcination temperature) | Higher SSA increases active sites and reaction rate [23] |
| Artificial Saliva | Corrosion Testing Medium | pH 7.0, contains electrolytes mimicking oral environment | Standardized medium for biomedical corrosion studies [21] |
| Simulated Gastric Acid | Accelerated Corrosion Testing | pH 1.5-3.0, contains pepsin and HCl | Represents severe gastroesophageal reflux conditions [21] |
| Hydrogen Peroxide (10% w/w) | Model Reaction Substrate | Decomposition reaction: 2H₂O₂ → 2H₂O + O₂(g) | Oxygen formation visually indicates catalytic activity [23] |
| Shale Organic Matter | Nanoscale Interface Studies | High TOC (2.61 wt%), complex mineral composition | Requires AFM/KPFM for nanoscale property mapping [22] |

Emerging Trends and Future Directions

Surface science continues to evolve with new challenges and opportunities. In corrosion protection, research focuses on developing advanced fluorine-resistant alloys and innovative protection strategies, including novel coatings and surface modifications for extreme environments [20]. The incorporation of rare earth elements shows particular promise for enhancing corrosion resistance in aggressive fluorine atmospheres [20].

Interdisciplinary approaches are advancing adhesion science, with upcoming research conferences highlighting themes like "Adhesion in Extreme and Engineered Environments" and "Merging Humans and Machines with Adhesion Science" [25]. These efforts aim to bridge fundamental understanding with practical applications across diverse fields.

In biomedical applications, surface characterization plays an increasingly crucial role in biological safety evaluations of medical devices [24]. Emerging approaches include comprehensive workflows for assessing data-poor extractables and leachables using in vitro data and in silico approaches, enhancing patient safety while streamlining regulatory processes [24].

Diagram 2: Emerging research directions in surface science. Advanced analysis techniques and modeling approaches enable new applications in energy, manufacturing, and environmental technologies.

Surface phenomena fundamentally control material behavior across virtually all application domains. From corrosion initiation at the thinnest surface layers to catalytic activity determined by specific surface area, from adhesion forces operating at the nanoscale to biocompatibility mediated by surface chemistry, the interface between a material and its environment dictates performance and reliability. Advanced surface analysis techniques continue to reveal the complex relationships between surface composition, structure, and functionality, enabling the rational design of materials with tailored surface properties. As materials science progresses toward increasingly sophisticated applications, from nanostructured devices to extreme environment operation, understanding and engineering surfaces will remain paramount for technological advancement.

Essential Surface Analysis Techniques and Their Biomedical Applications

Surface science is a critical field that investigates physical and chemical phenomena occurring at the interfaces between different phases, such as solid-vacuum, solid-liquid, and solid-gas boundaries [26]. Within this domain, secondary ion mass spectrometry (SIMS) has emerged as a powerful analytical technique for characterizing surface composition with exceptional sensitivity. Its time-of-flight (ToF) variant, ToF-SIMS, is a dominant experimental approach that combines high surface sensitivity and exceptional mass resolution with chemical imaging at both lateral and depth resolution [27] [28]. This technique provides elemental, chemical state, and molecular information from the outermost surface layers of solid materials, with an average analysis depth of approximately 1-2 nm [28]. In operation, a pulsed primary ion beam bombards the sample surface, causing the emission of secondary ions and ion clusters that are then separated by their mass-to-charge ratio in a time-of-flight mass analyzer [28] [29].

Although ToF-SIMS was first applied mainly to inorganic materials and semiconductors, its analytical capabilities have since expanded significantly, transforming it into a versatile tool with growing applications in biological, medical, and environmental research [27]. This transition has been facilitated by ongoing instrumental developments that have enhanced its molecular analysis capabilities, particularly for organic materials. Today, ToF-SIMS serves as a powerful technique for molecular surface mapping across diverse research fields, from battery technology to drug development and environmental science [27] [30] [31].

Fundamental Principles of ToF-SIMS

Core Operating Mechanism

The operational principle of ToF-SIMS relies on the interaction between a pulsed primary ion beam and the sample surface. When primary ions with energies typically ranging from 5 to 40 keV strike the surface, they initiate a collision cascade within the top few nanometers of the material [29]. This process leads to the desorption and emission of various species from the surface, including atoms, molecular fragments, and intact molecules; the charged fraction of these emitted species constitutes the secondary ions [29]. The secondary ions are then extracted into a time-of-flight mass analyzer, where they are separated based on their mass-to-charge ratio (m/z) according to the fundamental relationship expressed in Equation 1:

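Equation 1: Time-of-Flight Relationship

$$T = D\sqrt{\frac{M}{2\,KE}}$$

where T represents the flight time, D is the flight path length, M is the mass-to-charge ratio, and KE is the kinetic energy of the ion [29]. The relationship follows from KE = ½Mv² and T = D/v: because ions of the same charge acquire the same kinetic energy in the extraction field, flight time scales with the square root of M, and heavier ions arrive later. Since most secondary ions carry a single charge, M effectively corresponds to the mass of the ion, enabling precise mass determination.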
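To make Equation 1 concrete, the sketch below evaluates T = D√(M/(2 KE)) in SI units, writing KE = zeU for an ion of charge z accelerated through a potential U, and inverts it to recover mass from a measured flight time. The 2 kV extraction voltage and 2 m flight path are hypothetical round numbers, and the function names are illustrative. Real instruments use reflectrons and an empirical calibration (commonly of the form t = a√m + b) rather than this idealised field-free drift formula.

```python
import math

AMU = 1.66053906660e-27        # kg per unified atomic mass unit (Da)
E_CHARGE = 1.602176634e-19     # C, elementary charge

def flight_time(mass_da, flight_path_m=2.0, voltage_v=2000.0, charge=1):
    """Ideal drift time T = D * sqrt(m / (2 z e U)) for an ion of mass_da (Da)."""
    m = mass_da * AMU
    kinetic_energy = charge * E_CHARGE * voltage_v   # KE = z e U, in joules
    return flight_path_m * math.sqrt(m / (2.0 * kinetic_energy))

def mass_from_time(t_s, flight_path_m=2.0, voltage_v=2000.0, charge=1):
    """Invert Equation 1: m = 2 z e U (T / D)^2, returned in Da."""
    kinetic_energy = charge * E_CHARGE * voltage_v
    return 2.0 * kinetic_energy * (t_s / flight_path_m) ** 2 / AMU

t100 = flight_time(100.0)
print(f"m/z 100: {t100 * 1e6:.1f} us")                # roughly 32 us under these assumptions
print(f"m/z 400: {flight_time(400.0) * 1e6:.1f} us")  # 4x the mass -> 2x the flight time
```

The square-root dependence is why mass resolution in ToF analyzers is usually discussed in terms of timing precision: doubling the mass only lengthens the flight time by a factor of about 1.41.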

A defining characteristic of ToF-SIMS is the static limit, typically defined as an ion dose of 1 × 10¹³ ions/cm² for organic materials [29]. Operating below this limit ensures that the primary ion bombardment does not significantly damage the surface chemistry being analyzed, thereby preserving the integrity of the molecular information obtained. Most analyses are conducted at or below 1 × 10¹² ions/cm² to remain well within this static regime [29].
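The primary ion dose is the number of ions delivered per unit area, D = I·t/(e·A), where I is the average beam current (for a pulsed gun, the DC current multiplied by the duty cycle), t the acquisition time, and A the rastered area. The sketch below checks an acquisition against the 1 × 10¹² ions/cm² working limit; the 1 pA average current, 100 s acquisition, 500 µm × 500 µm field of view, and the function names are hypothetical choices for illustration, and singly charged primary ions are assumed.

```python
E_CHARGE = 1.602176634e-19   # C per singly charged primary ion

def primary_ion_dose(current_a, time_s, width_um, height_um):
    """Dose in ions/cm^2 for a singly charged beam rastered over width x height."""
    area_cm2 = (width_um * 1e-4) * (height_um * 1e-4)   # 1 um = 1e-4 cm
    n_ions = current_a * time_s / E_CHARGE              # total ions delivered
    return n_ions / area_cm2

def max_time_for_dose(dose_limit, current_a, width_um, height_um):
    """Longest acquisition (s) that keeps the dose at or below dose_limit."""
    area_cm2 = (width_um * 1e-4) * (height_um * 1e-4)
    return dose_limit * E_CHARGE * area_cm2 / current_a

dose = primary_ion_dose(1e-12, 100.0, 500.0, 500.0)
t_max = max_time_for_dose(1e12, 1e-12, 500.0, 500.0)
print(f"dose = {dose:.2e} ions/cm^2; 1e12 ions/cm^2 reached after {t_max:.0f} s")
```

Because dose scales inversely with area, shrinking the field of view to 50 µm × 50 µm at the same current would reach the same limit 100 times sooner, which is one reason high-resolution imaging of small areas must keep acquisition times short.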

Comparison with Other Surface Analysis Techniques

ToF-SIMS occupies a unique position within the landscape of surface analysis techniques, offering distinct advantages and limitations compared to other methods.

Table 1: Comparison of Surface Analysis Techniques

| Technique | Information Obtained | Analysis Depth | Lateral Resolution | Strengths | Limitations |
|---|---|---|---|---|---|
| ToF-SIMS | Elemental, molecular, chemical state | ~1-2 nm [28] | <0.1 µm [28] | High surface sensitivity, molecular information, high mass resolution | Complex spectra interpretation, matrix effects |
| XPS | Elemental, chemical state | 2-10 nm [27] | 3-10 µm | Quantitative, chemical bonding information | Limited molecular information, lower spatial resolution |
| SEM/EDS | Elemental | 1-3 µm [28] | ~1 µm | Rapid elemental analysis, high spatial resolution | Limited to elemental information, deeper sampling volume |
| AFM | Topography, mechanical properties | Surface topography | <1 nm | Excellent vertical resolution, measures physical properties | No direct chemical information |
| Raman | Molecular vibrations, crystal structure | 0.5-2 µm | ~0.5 µm | Non-destructive, chemical bonding information | Limited surface sensitivity, fluorescence interference |

Unlike bulk analysis techniques such as gas chromatography-mass spectrometry (GC-MS) or liquid chromatography-mass spectrometry (LC-MS), which require complex sample preparation and extraction procedures, ToF-SIMS offers simple sample preparation and enables direct analysis of surfaces without extensive pretreatment [27]. While spectroscopic techniques like Fourier transform infrared spectroscopy (FTIR) and Raman microscopy provide information about chemical bonds and functional groups, they have limitations in molecular analysis that ToF-SIMS can overcome through its high mass resolution and sensitivity [27].

Technical Components and Methodologies

A typical ToF-SIMS instrument consists of several key components: an ultra-high vacuum (UHV) system maintaining pressures between 10⁻⁸ and 10⁻⁹ mbar, primary ion sources, a sample stage, a time-of-flight mass analyzer, and a detector system [29]. The choice of primary ion source significantly influences the type and quality of information obtained, with different sources offering distinct advantages for specific applications.

Table 2: Common Primary Ion Sources in ToF-SIMS

| Ion Source Type | Examples | Typical Energy | Best For | Key Characteristics |
|---|---|---|---|---|
| Liquid Metal Ion Guns (LMIG) | Bi⁺, Bi₃⁺, Auₙ⁺ | 10-30 keV | High spatial resolution imaging | High brightness, small spot size |
| Cesium Ion Sources | Cs⁺ | 1-15 keV | Depth profiling, negative ion yield | Enhances negative ion yield via surface reduction |
| Gas Cluster Ion Beams (GCIB) | Ar₁₅₀₀⁺, (CO₂)ₙ⁺ | 5-20 keV (total) | Organic depth profiling, minimal damage | Low energy per atom, reduced fragmentation |
| Oxygen Ion Sources | O₂⁺ | 0.5-5 keV | Positive ion yield, depth profiling | Enhances positive ion yield via surface oxidation |

The development of gas cluster ion beams (GCIB) using large clusters of atoms (e.g., Ar₁₅₀₀⁺ with energies of 5-10 keV) has been particularly transformative for organic and molecular analysis [31] [29]. These clusters distribute their total energy among many atoms, resulting in very low energy per atom (e.g., 3.33 eV for 5 keV Ar₁₅₀₀⁺) and consequently causing significantly less fragmentation and damage to molecular species compared to monatomic ions [31]. This advancement has enabled high-resolution depth profiling of organic materials and fragile biological samples that was previously challenging with conventional ion sources.
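The energy-per-atom figure comes from dividing the cluster's total kinetic energy evenly among its n constituent atoms, E/n. The short sketch below reproduces the 3.33 eV value for 5 keV Ar₁₅₀₀⁺ and contrasts it with a monatomic ion; the 10 keV Ar₂₀₀₀⁺ and 1 keV Ar⁺ cases are illustrative additions, and even sharing of the energy is the usual simplification rather than an exact description of the impact.

```python
def energy_per_atom_ev(total_energy_kev, cluster_size):
    """Mean kinetic energy per constituent atom (eV) for an n-atom cluster ion."""
    return total_energy_kev * 1000.0 / cluster_size

# (label, total energy in keV, number of atoms in the projectile)
for label, e_kev, n in [("Ar1500+ at 5 keV", 5.0, 1500),
                        ("Ar2000+ at 10 keV", 10.0, 2000),
                        ("Ar+ at 1 keV", 1.0, 1)]:
    print(f"{label}: {energy_per_atom_ev(e_kev, n):.2f} eV/atom")
```

The contrast of a few eV per atom for the clusters against hundreds to thousands of eV for a monatomic projectile is what underlies the reduced fragmentation described above.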

Operational Modes and Data Acquisition

ToF-SIMS operates in three primary data acquisition modes, each offering distinct analytical capabilities:

Surface Spectral Analysis: This mode provides detailed chemical characterization of specific points on a sample surface. High mass resolution (m/Δm > 10,000) enables discrimination between ions with very similar masses, allowing precise molecular identification [27] [29]. Spectra can be acquired in both positive and negative ion modes, often providing complementary information about the surface composition.
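To see why m/Δm matters, consider two fragments that share nominal mass 43, C₃H₇⁺ and C₂H₃O⁺. The sketch computes their monoisotopic masses from standard atomic masses and the resolving power m/Δm needed to separate them, taking m as the mean of the pair; the function names are illustrative. Resolving-power conventions (for example FWHM-based definitions) differ between instruments, so treat the result as an order-of-magnitude guide. The electron mass is ignored because it cancels in Δm for two ions of equal charge.

```python
# Monoisotopic atomic masses (Da) of the most abundant isotopes
ATOMIC_MASS = {"H": 1.00782503, "C": 12.0, "O": 15.99491462}

def monoisotopic_mass(composition):
    """Sum of monoisotopic masses for a formula given as {element: count}."""
    return sum(ATOMIC_MASS[el] * n for el, n in composition.items())

def required_resolving_power(comp_a, comp_b):
    """m/dm needed to separate two ions, using their mean mass as m."""
    m_a = monoisotopic_mass(comp_a)
    m_b = monoisotopic_mass(comp_b)
    return ((m_a + m_b) / 2.0) / abs(m_a - m_b)

c3h7 = {"C": 3, "H": 7}
c2h3o = {"C": 2, "H": 3, "O": 1}
r = required_resolving_power(c3h7, c2h3o)
print(f"C3H7+ vs C2H3O+: m/dm ~ {r:.0f}")
```

This pair needs a resolving power of only about 10³. Because m/Δm scales with m for a fixed mass difference, the same Δm at higher mass demands proportionally higher resolving power, which is where values above 10,000 become necessary.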

Imaging Mode: By scanning a microfocused ion beam across the sample surface, ToF-SIMS can generate chemical images with sub-micrometer spatial resolution (below 100 nm in modern instruments) [28] [29]. This capability enables the visualization of chemical distributions across a surface, revealing heterogeneity, contaminants, or domain structures that would be impossible to detect with bulk analysis techniques.
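Conceptually, each raster position yields its own mass spectrum, and a chemical image is formed by summing, pixel by pixel, the counts that fall inside a chosen m/z window. The sketch below assumes the data are already available as a hypothetical list of (x, y, m/z) detector events; real instruments store data in vendor-specific formats, so the event layout and the function name `ion_image` are illustrative only.

```python
def ion_image(events, mz_center, mz_halfwidth, nx, ny):
    """Build an nx-by-ny count map for ions within mz_center +/- mz_halfwidth.

    events: iterable of (x, y, mz) tuples with 0 <= x < nx and 0 <= y < ny.
    Returns a list of ny rows, each a list of nx integer counts.
    """
    image = [[0] * nx for _ in range(ny)]
    lo, hi = mz_center - mz_halfwidth, mz_center + mz_halfwidth
    for x, y, mz in events:
        if lo <= mz <= hi:          # keep only ions inside the selected mass window
            image[y][x] += 1
    return image

# Hypothetical events: three at m/z ~43.05 and one at m/z 73.05 outside the window
events = [(0, 0, 43.05), (0, 0, 43.06), (1, 0, 73.05), (1, 1, 43.05)]
img = ion_image(events, mz_center=43.055, mz_halfwidth=0.02, nx=2, ny=2)
print(img)
```

Choosing a narrow window is only meaningful when the mass resolution is high enough to keep neighbouring peaks out of it, which links imaging quality back to the spectral mode.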

Depth Profiling: Combining ToF-SIMS analysis with continuous sputtering using a dedicated ion source enables the characterization of thin film structures and interfaces in the z-direction [28] [31]. This powerful mode provides three-dimensional chemical characterization, with depth resolution reaching below 10 nm in organic materials [29]. The choice of sputter ion significantly impacts depth resolution and the preservation of molecular information, with argon clusters generally preferred for organic materials due to reduced fragmentation [31].
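Depth profiles are recorded against sputter time, so converting them to depth requires a sputter-rate calibration. A minimal sketch, assuming a constant sputter rate calibrated on a separate reference film of known thickness, and locating an interface where a layer-specific signal first falls to 50% of its maximum (a common convention). The reference thickness, time axis, intensities, and function names are hypothetical, and a constant rate is a simplification, since sputter rates usually differ between organic and inorganic layers.

```python
def time_to_depth(times_s, sputter_rate_nm_per_s):
    """Convert sputter times to depths assuming a constant sputter rate."""
    return [t * sputter_rate_nm_per_s for t in times_s]

def interface_depth(depths_nm, intensities, fraction=0.5):
    """Depth where the signal first drops below fraction * maximum intensity.

    The crossing point is linearly interpolated between the two
    bracketing data points; returns None if no crossing is found.
    """
    threshold = fraction * max(intensities)
    for i in range(1, len(intensities)):
        prev, cur = intensities[i - 1], intensities[i]
        if prev >= threshold > cur:
            frac = (prev - threshold) / (prev - cur)
            return depths_nm[i - 1] + frac * (depths_nm[i] - depths_nm[i - 1])
    return None

# Hypothetical calibration: a 50 nm reference film sputtered through in 500 s
rate = 50.0 / 500.0                              # nm/s
times = [0, 100, 200, 300, 400, 500, 600]        # s
depths = time_to_depth(times, rate)              # 0 to 60 nm
signal = [1000, 1000, 980, 900, 300, 50, 10]     # layer-specific ion counts
print(f"interface at ~{interface_depth(depths, signal):.1f} nm")
```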

Experimental Design and Protocols

Sample Preparation Considerations

Proper sample preparation is critical for successful ToF-SIMS analysis, particularly for sensitive or reactive materials. The specific approach varies significantly depending on the sample type and analytical objectives:

For environmental samples such as aerosols, soils, and plant materials, careful collection and minimal pretreatment are often necessary to preserve native surface chemistry [27]. Atmospheric aerosol particles may be collected on specialized substrates, while plant tissues might require cryo-preparation to maintain metabolic state and spatial distribution of compounds.

Analysis of reactive materials such as lithium metal electrodes in battery research demands stringent protocols to prevent surface alteration before analysis. The use of Ar-filled transfer vessels provides an inert atmosphere during sample transfer, ensuring an unimpaired sample surface for analysis [31].

Biological tissues typically require specialized preparation, including cryo-preservation, thin sectioning, and appropriate substrate selection to maintain cellular integrity and molecular distributions during analysis under ultra-high vacuum conditions [29].

Optimized Sputter Ion Selection for Depth Profiling

Depth profiling requires careful selection of sputter ion parameters to balance depth resolution, measurement time, and preservation of molecular information. Recent research on lithium metal surfaces with solid electrolyte interphase (SEI) layers provides valuable insights into optimal conditions:

Table 3: Sputter Ion Comparison for Depth Profiling

| Sputter Ion | Energy Parameters | Advantages | Limitations | Optimal Applications |
|---|---|---|---|---|
| Ar₁₅₀₀⁺ (Cluster) | 5 keV total (∼3.33 eV/atom) | Minimal fragmentation, preserves molecular information | Lower sputter yield, longer measurement times | Organic layers, polymers, battery SEI [31] |
| Cs⁺ (Monatomic) | 0.5-2 keV | Enhanced negative ion yield, higher sputter rate | Causes significant fragmentation, surface reduction | Inorganic materials, elemental depth profiling [31] |
| Ar⁺ (Monatomic) | 0.5-2 keV | Balanced sputter rate, compatible with both polarities | Moderate fragmentation, no yield enhancement | General purpose, mixed organic-inorganic systems [31] |

A comparative study on lithium metal sections with SEI layers demonstrated that Ar₁₅₀₀⁺ cluster ions at 5 keV with a sputter ion current of 500 pA provided optimal preservation of molecular information, though with lower sputter rates requiring longer measurement times [31]. In contrast, Cs⁺ ions offered higher sputter rates but induced significant fragmentation, making them more suitable for elemental analysis than molecular characterization.

Essential Research Reagent Solutions

Successful ToF-SIMS analysis requires specific materials and reagents tailored to different sample types and analytical goals.

Table 4: Essential Research Reagents and Materials for ToF-SIMS

| Reagent/Material | Function/Purpose | Application Examples |
|---|---|---|
| Conductive Substrates (Si, Au, ITO) | Provides grounding, minimizes charging, enhances secondary ion yield | Insulating samples (polymers, biological tissues) |